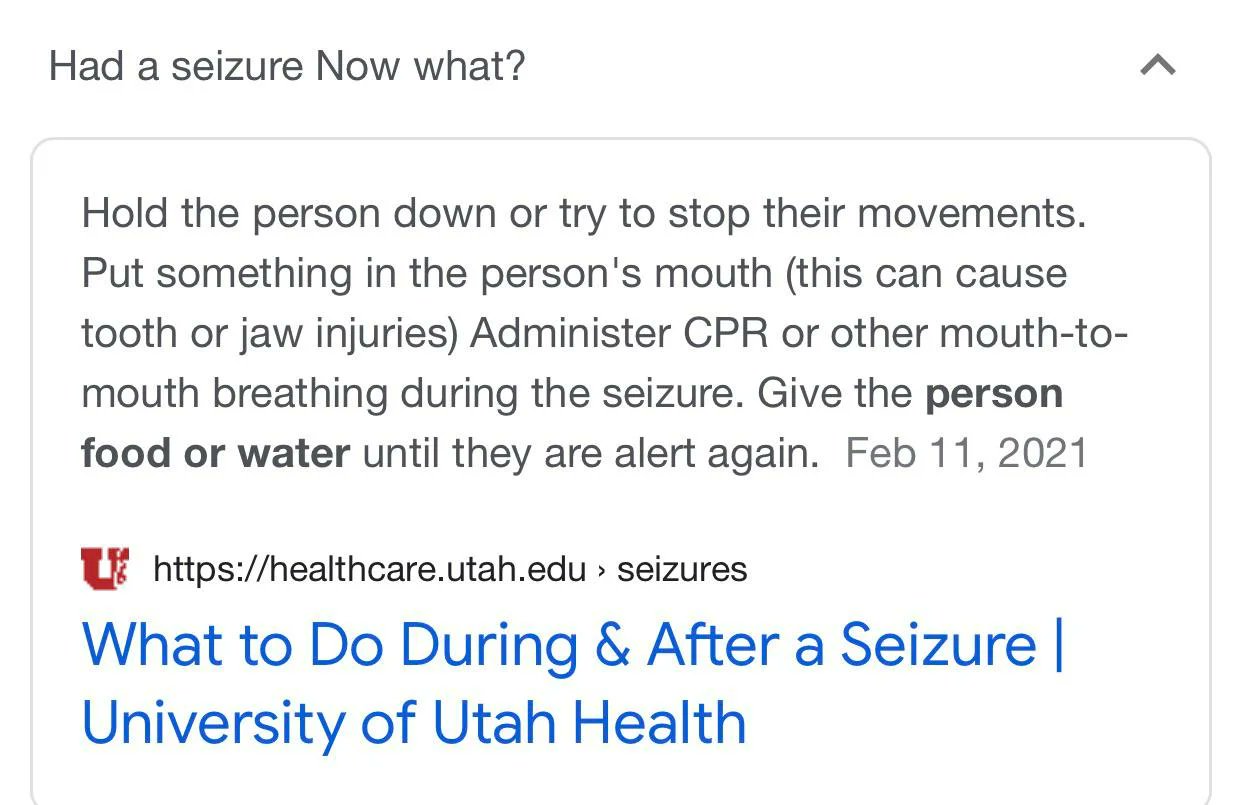

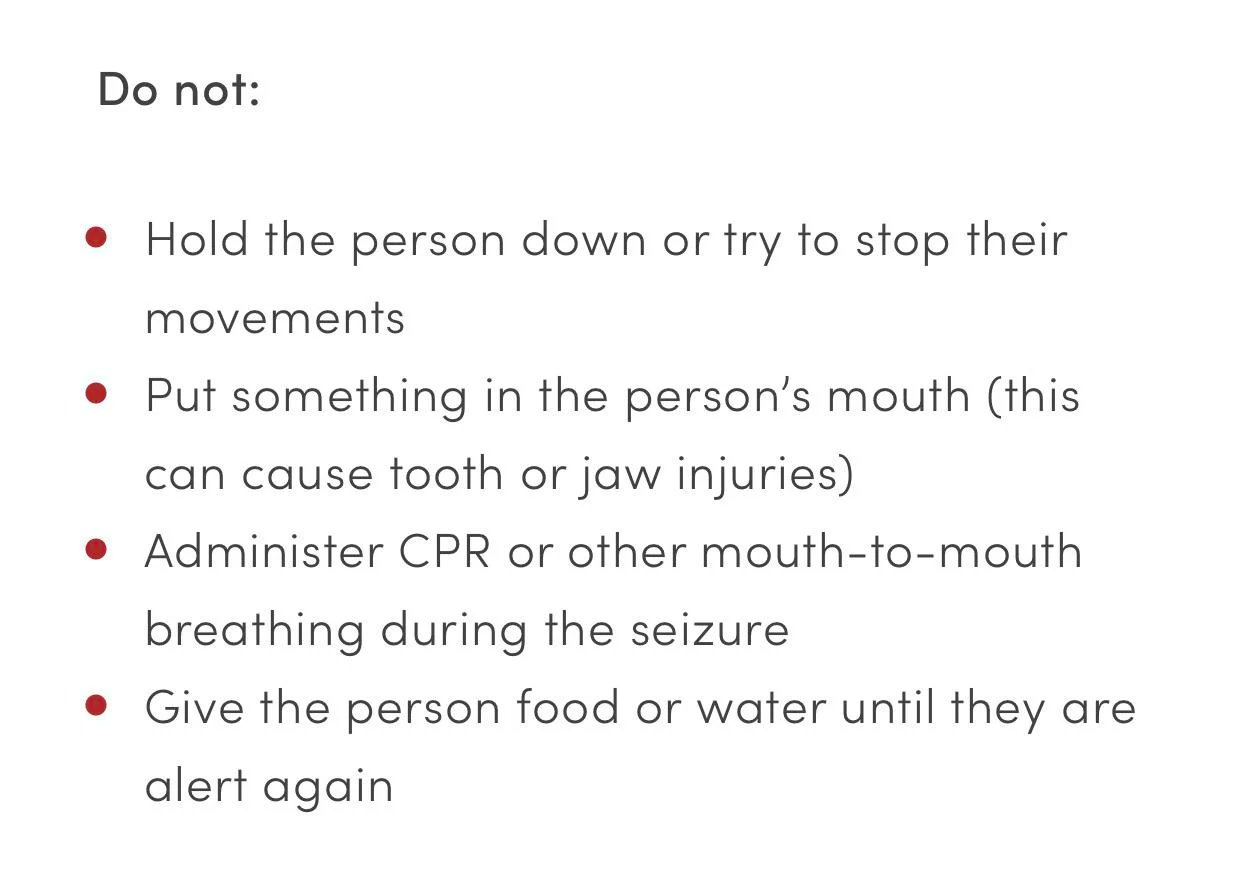

Wait, what's that? That last one wasn't funny, you say? Did we just run face-first into the cold brick wall of reality, where bad information means people die?

Well, sorry. Because it's not the first time Google gave out fatal advice, nor the last. Nor is there any end in sight. Whoops!

## The Semantic Search

So, quick background. These direct query responses -- a kind of semantic search -- are what Google calls [Featured Snippets][FeaturedSnippets]. Compared to traditional indexing, semantic search is actually a relative newcomer to web search technology.

In 2009 [Wolfram launched Wolfram\|Alpha][wolfram-launch], a website that, instead of searching indexed web pages, promised to answer plain-English queries with *computational knowledge*: real, scientific data backed by scientific sources.

Wolfram was a pioneer in the field, but other companies were quick to see the value in *Semantic Search*, or what we might now call responses. Right on the heels of Wolfram\|Alpha, Microsoft replaced Live Search with Bing.com, which was what they called a "Decision Engine." In the [2009 Bing.com press release][bing-decisions], CEO Steve Ballmer describes the motivation behind the focus on direct responses:

> Today, search engines do a decent job of helping people navigate the Web and find information, but they don't do a very good job of enabling people to use the information they find. ... Bing is an important first step forward in our long-term effort to deliver innovations in search that enable people to find information quickly and use the information they've found to accomplish tasks and make smart decisions.

Today, Google has this video showing the evolution of the search engine, starting with the original logo and a plain index of websites, and ending with a modern-day search page, complete with contextual elements like photos, locations, user reviews, and definitions. You know, what we're familiar with today.
Google [describes its goal for search][howsearchworks-ourapproach] as being to "deliver the most relevant and reliable information available" and to "make \[the world's information] universally accessible and useful". Google is particularly proud of [featured snippets and responses][howsearchworks-responses], and paraded them out in Hashtag Search On '21:

One contradiction stands out to me here: at 00:45, a Google spokesperson uses "traffic Google sent to the open web" as a metric Google is proud of. But, of course, featured snippets are a step away from that; they answer questions quickly, negating the need for someone to visit a site, and instead keeping them on a Google-controlled page. This has been a trend with new Google tech, the most obvious example being AMP [(which is bad)][eff-amp].

This seems subtle, but it really is a fundamental shift in the dynamic. As Google moves more and more content into its site, it stops being an index of sources of information and starts asserting information itself. It's the Wikipedia-as-a-source problem; "Google says" isn't enough, can't be enough.

::: aside tangent
    Hey, [remember when there was a whole panic about RSS](/blog/2021/10/17/the-joy-of-rss/), because people were afraid of aggregators scooping up content and serving it on their own sites without compensating the authors or contributing to their revenue with hits? Where did they go? Because that's *actually* what this is, as opposed to RSS, where that did not happen.

## Google isn't good at search

But for all Google's talk about providing good information and preventing spam, Google search results (and search results in general) are actually very bad at present. Most queries about specific questions result in either content scraped from other sites or just a [slurry of](https://twitter.com/eevee/status/1466974002576986119?s=20) [SEO mush](https://twitter.com/eevee/status/1466653037775110145), the aesthetics of "information" with none of the substance.
(For my non-technical friends, see the [recipe blog problem][RecipeBlogProblem].)

As I've said before, there needs to be a manual flag Google puts on authoritative sources. It's shameful how effective spam websites are on technical content. Official manuals on known, trusted domains should rise to the top of results. This doesn't solve the semantic search problem, but it's an obvious remedy to an obvious problem, and it's frankly shameful that Google and others haven't been doing it for years already.

I saw Michael Seibel recently sum up the current state of affairs:

> [Michael Seibel (@mwseibel)](https://twitter.com/mwseibel/status/1477701120319361026):
> A recent small medical issue has highlighted how much someone needs to disrupt Google Search. Google is no longer producing high quality search results in a significant number of important categories.
>
> Health, product reviews, recipes are three categories I searched today where top results featured clickbait sites riddled with crappy ads. I'm sure there are many more. Feel free to reply to the thread with the categories where you no longer trust Google Search results.
>
> I'm pretty sure the engineers responsible for Google Search aren't happy about the quality of results either. I'm wondering if this isn't really a tech problem but the influence of some suit responsible for quarterly ad revenue increases.
>
> ...
>
> The more I think about this, the more it looks like classic short term thinking. Juice ad revenue in the short run. Open the door to complete disruption in the long run…

## Trying to use algorithms to solve human problems

But I'm not going to be *too* harsh on Google's sourcing algorithm. It's probably a very good information sourcing algorithm. The problem is that even a very good information sourcing algorithm *can't possibly work* in any way approaching fit-for-purpose here.
The task here is "process this collection of information and determine both which sources are correct and credible *and* what those sources' intended meanings are." This isn't an algorithmic problem. Even just half of that task -- "understanding intended meaning" -- is not only not something computers are equipped to do but isn't even something humans are good at!

By its very nature Google can't help but "take everything it reads on the internet at face value" (and knowing not to do that is, for humans, just basic operating knowledge). And so you get the garbage-in, garbage-out problem, at the very least. Google can't differentiate subculture context. Even people are bad at this! And, of course, Google can "believe" wrong information, or just [regurgitate terrible advice](https://twitter.com/lilsinnsitive/status/1472629416853389316).

But the problem is deeper than that, because the whole premise that all questions have single correct answers is wrong. There exists debate on points of fact. There exists debate on points of fact! We haven't solved information literacy yet, but we haven't solved information yet either. The interface that takes in questions and returns single correct answers doesn't just need a very good sourcing function, it's an impossible task to begin with.

Not only can Google not automate information literacy, the fact that they're pretending they can is itself incredibly harmful.

But the urge to solve genuinely difficult social, human problems with an extra layer of automation pervades tech culture. It essentially *is* tech culture. (Steam is one of the worst offenders.)

And, of course, nobody in tech ever got promoted for replacing an algorithm with skilled human labor. Or even for pointing out that they were slapping an algorithm on an unfit problem. Everything pulls the other direction.

## Dangers of integrating these services into society

Of course, there's a huge incentive to maximize the number of queries you can respond to.
Some of that can be done with reliable data sourcing (like Wolfram\|Alpha does), but there are a lot of questions whose answers aren't in a feasible data set. And, [according to Google, 15% of daily searches are new queries that have never been made before][howsearchworks-ourapproach], so if your goal is to maximize how many questions you can answer (read: $$$), human curation isn't feasible either.

But if you're Google, you've already got most of the internet indexed anyway. So... why not just pull from there?

Well, I mean, *we* know why. The bad information, and the harm, and the causing of preventable deaths, and all that.

And this bad information is at its worst when it's fed into personal assistants. Your Alexas, Siris, Cortanas all want to do exactly this: answer questions with actionable data.

The problem is the human/computer interface is completely different with voice assistants than it is with traditional search. When you search and get a featured snippet, it's on a page with hundreds of other articles and a virtually limitless number of second opinions. The human has the agency to do their own research using the massively powerful tools at their disposal.

Not so with a voice assistant. They have the opportunity to give zero-to-one answers, which you can either take or leave. You lose that ability to engage with the information or do any followup research, and so it becomes much, much more important for those answers to be good.

And they're not.

Let's revisit that "had a seizure, now what" question, but this time without the option to click through to the website to see context.

***Oh no.***

And of course these aren't one-off problems, either. We see these stories regularly. Like just back in December, when [Amazon's Alexa told a 10-year-old to electrocute herself on a wall outlet][AmazonsAlexaToldA10YearOldToElectrocute]. Or Google again, but this time [killing babies][KillingBabies].

## The insufficient responses

Let's pause here for a moment and look at the response to just one of these incidents: the seizure one. Google went with the only option they had (other than discontinuing the ill-conceived feature, of course): case-by-case moderation.

Now, just to immediately prove the point that case-by-case moderation can't deal with a fundamentally flawed problem like this, *they couldn't even fix the seizure answers*.

See, it wasn't fixed by ensuring future summaries of life-critical information would be written and reviewed by humans, because that's a cost. A necessary cost for the feature, in this case, but that doesn't stop Google from being unwilling to pay it.

And, of course, the only reason this got to a Google engineer at all is that the deadly advice *wasn't* followed, the person survived, and [the incident blew up in the news](https://twitter.com/JoBrodie/status/1449512503730483207). Even if humans *could* filter through the output of an algorithm that spits out bad information (and they can't), best-case scenario we have a system where Google only lies about *unpopular* topics.

## COVID testing

And then we get to COVID.

Now, with all the disinformation about COVID, sites like [Twitter](https://twitter.com/giovan_h/status/1480361844992888835) and [YouTube][fighting-misinformation] have taken manual steps to try to specifically provide good sources of information when people ask, which is probably a good thing.

But even with those manual measures in place, when Joe Biden told people to "Google COVID test near me" in lieu of a national program, it raised eyebrows.

Now, apparently there was some effort to coordinate manual sourcing of reliable information for COVID testing, but it sounds like that might have some issues too:

So now, as [Kamala Harris scolds people for even asking about Google alternatives in an unimaginably condescending interview][KamalaHarrisScoldsPeopleForEven], we're back in the middle of it.
People are going to use Google itself as the authoritative source of information, because the US federal government literally gave that as the only option. And so there will be scams, and misinformation, and people will be hurt.

But at least engineers at Google know about COVID. At least, on this topic, somebody somewhere is going to *try* to filter out the lies. For the infinitude of other questions you might have? You'll get an answer, but whether or not it kills you is still luck of the draw.

::: aside update
    Shortly after I published this article, the Biden administration announced a new public program allowing people to order free at-home testing from the post office, instead of being left to the mercy of a Google search.

    My main takeaway from that is ***I won.***

# Related Reading

- [Safiya Umoja Noble, "Algorithms of Oppression - How Search Engines Reinforce Racism"][SafiyaUmojaNobleAlgorithmsOfOppression]{: .related-reading}
- [Ax Sharma, "Amazon Alexa slammed for giving lethal challenge to 10-year-old girl"][AmazonsAlexaToldA10YearOldToElectrocute]{: .related-reading}
- [Alexa tells 10-year-old girl to touch live plug with penny - BBC News](https://www.bbc.com/news/technology-59810383){: .related-reading}
- [Alexis Hancock, "Google's AMP, the Canonical Web, and the Importance of Web Standards"][eff-amp]{: .related-reading}
- [Tobie Langel, re: Alexa bbc article](https://twitter.com/tobie/status/1476329689924911105?s=20&t=dkS4wX6PLllt8aNMtYPu_g){: .related-reading}
- [Jon Porter, "Zelda recipe appears in serious novel by serious author after rushed Google search"][zelda-recipe]{: .related-reading}
- [Breaking911, “VP Kamala Harris to Americans who can't find a COVID test: “Google It””][KamalaHarrisScoldsPeopleForEven]{: .related-reading}
- [Reddit, "Do food bloggers realize how awful their recipe pages are?"][RecipeBlogProblem]{: .related-reading}
- [Cory Doctorow, "Backdooring a summarizerbot to shape opinion"](https://pluralistic.net/2022/10/21/let-me-summarize/#i-read-the-abstract){: .related-reading}
- [Microsoft, "Microsoft's New Search at Bing.com Helps People Make Better Decisions"][bing-decisions]{: .related-reading}
- [Russell Foltz-Smith, "Reactions to Wolfram|Alpha from around the Web"][wolfram-launch]{: .related-reading}
- [Nick Slater, "How SEO Is Gentrifying the Internet"](https://www.currentaffairs.org/2020/12/how-seo-is-gentrifying-the-internet){: .related-reading}
- [Ed Z, "The Rot Economy"](https://ez.substack.com/p/the-rot-economy){: .related-reading}
- [Google, "YouTube Misinformation - How YouTube Works"][fighting-misinformation]{: .related-reading}
- [Google, "Our approach to Search"][howsearchworks-ourapproach]{: .related-reading}
- [Google, "Responses"][howsearchworks-responses]{: .related-reading}
- [Google, "How Google's featured snippets work"][FeaturedSnippets]{: .related-reading}

[KamalaHarrisScoldsPeopleForEven]: https://breaking911.com/vp-kamala-harris-to-americans-who-cant-find-a-covid-test-google-it/
[AmazonsAlexaToldA10YearOldToElectrocute]: https://www.bleepingcomputer.com/news/technology/amazon-alexa-slammed-for-giving-lethal-challenge-to-10-year-old-girl/
[KillingBabies]: https://twitter.com/brimwats/status/1449749173130067974
[howsearchworks-ourapproach]: https://www.google.com/search/howsearchworks/our-approach/
[RecipeBlogProblem]: https://www.reddit.com/r/cookingforbeginners/comments/nyk719/do_food_bloggers_realize_how_awful_their_recipe/
[eff-amp]: https://www.eff.org/deeplinks/2020/07/googles-amp-canonical-web-and-importance-web-standards-0
[howsearchworks-responses]: https://www.google.com/search/howsearchworks/responses/
[bing-decisions]: https://news.microsoft.com/2009/05/28/microsofts-new-search-at-bing-com-helps-people-make-better-decisions/
[wolfram-launch]: https://blog.wolframalpha.com/2009/05/04/reactions-to-wolfram-alpha-from-around-the-web/#more-214
[FeaturedSnippets]: https://support.google.com/websearch/answer/9351707?hl=en
[zelda-recipe]: https://www.theverge.com/tldr/2020/8/3/21352299/zelda-breath-of-the-wild-red-clothes-dye-traveler-gates-of-wisdom-john-boyne-google-search-results
[SafiyaUmojaNobleAlgorithmsOfOppression]: https://nyupress.org/9781479837243/algorithms-of-oppression/
[fighting-misinformation]: https://www.youtube.com/howyoutubeworks/our-commitments/fighting-misinformation/

The Google search summary vs the actual page

— insomnia club (@soft) October 16, 2021