Recent tech trends have followed a pattern of being huge society-disrupting systems that people don’t actually want. Worse, it then turns out there’s some reason they’re not just useless, they’re actively harmful. While planned obsolescence means this applies to consumer products in general, the recent major tech fad hypes — cryptocurrency, “the metaverse”, artificial intelligence… — all seem to be comically expensive boondoggles that only really benefit the salesmen.

Monorail!


The most recent tech-fad-and-why-it’s-bad pairing seems to be AI and its energy use. This product-problem combo has hit the mainstream as an evocative illustration of waste, with headlines like “Google AI Uses Enough Electricity In 1 Second To Charge 7 Electric Cars” and “ChatGPT requires 15 times more energy than a traditional web search.”

It’s a narrative that’s very much in line with what a disillusioned tech consumer expects. There is a justified resentment boiling for big tech companies right now, and AI seems to slot in as another step in the wrong direction. The latest tech push isn’t just capital trying to control the world with a product people don’t want, it’s burning through the planet to do it.

But, when it comes to AI, is that actually the case?

What are the actual ramifications of the explosive growth of AI when it comes to power consumption? How much more expensive is it to run an AI model than to use the next-best method? Do we have the resources to switch to using AI on things we weren’t before, and is it responsible to use them for that? Is it worth it?

These are really worthwhile questions, and I don’t think the answers are as easy as “it’s enough like the last thing that we might as well hate it too.” There are proportional costs we have to weigh in order to make a well-grounded judgement, and after looking at them, I think the energy numbers are surprisingly good, compared to the discourse.

Part 1: Efficiency and Proportional Cost

Competing trends

“AI” is just a kind of program, so the “energy use of AI” is the energy used by the computers that are performing AI’s various processing tasks1. So, first, what are the big-picture trends in computational performance as it relates to electricity use?

At a high level, these metrics are all generally positively correlated:

- Power consumed

- Heat emitted

- Carbon emitted

- Water used

This is a positive correlation, meaning they all move together in the same direction. When one goes up, the rest go up. When one goes down, the rest go down. Using more power emits more heat, which takes more water to cool. Depending on the method, power generation in aggregate emits some amount of carbon, although green energy can push that number down. (Although all thermoelectric power generation uses water too, even green tech.)

It’s also generally good to push all these numbers down. Consuming fewer resources is good and emitting less carbon and waste heat is good. When we talk about reducing energy use, we’re pushing this whole bundle of things down, which is all good.

The exact value of those properties at any given moment varies a lot because two significant factors are both changing variables:

Performance demand (performance/time) for AI is obviously increasing, as companies are selling more AI-based services. Whether it’s real “market demand” or companies operating at a loss to promote their product, there’s obviously far more AI processing happening now than there was ten years ago.

But at the same time, energy efficiency (performance/watt) is actively increasing, driven by technical improvements. Overall, energy demands are still increasing, but the efficiency improvements are reducing the impact.

When it comes to overall energy use, those trends plug in something like this:

Demand is jobs/time, which is going up (probably).

Complexity (operations/job) varies, and is influenced by how complex the job is and whether specialized chips are being used or not.

Operation cost (energy/operation) is a chip-specific kind of power use, and going down as chips get more and more efficient.

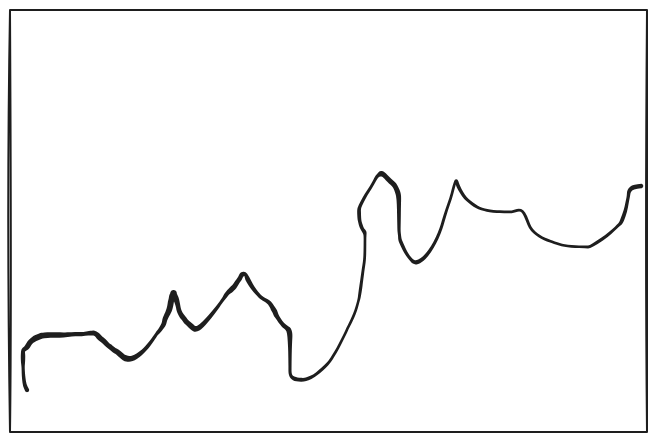
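As a rough sketch of how those three factors multiply together, here is the relationship in Python. The function name and every number below are hypothetical placeholders chosen for illustration, not measurements:

```python
def energy_per_hour_wh(jobs_per_hour, ops_per_job, wh_per_op):
    """Energy used per hour = demand x complexity x operation cost."""
    return jobs_per_hour * ops_per_job * wh_per_op


# Hypothetical baseline; these values are placeholders, not measurements.
baseline = energy_per_hour_wh(jobs_per_hour=1_000, ops_per_job=50, wh_per_op=0.002)

# Demand doubles while chips get twice as efficient: the total stays flat.
flat = energy_per_hour_wh(jobs_per_hour=2_000, ops_per_job=50, wh_per_op=0.001)

# Demand triples while efficiency only doubles: the total still goes up.
rising = energy_per_hour_wh(jobs_per_hour=3_000, ops_per_job=50, wh_per_op=0.001)

print(baseline, flat, rising)
```

The point of the sketch is only that the same total can move up, down, or sideways depending on which factor changed most recently.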

Put together, this makes the consumption trend “wobbly”:

Because these variable factors push the overall power usage in both directions, we don’t see consistently increasing or decreasing numbers. Instead, we see a wide range of variance, depending on how the other variables moved lately. Efficiency gains push overall energy use down, increased demand pushes it up, and so we would see a sort of “wobble” as opposed to a monotonic line. The overall trend is ultimately increasing due to increasing demand and complexity in the current “rollout” period, but that’s not a requirement of the technology or industry.

you know, increasing, but wobbly


But even though their fluctuation makes them hard to compare moment-to-moment, the rough numbers tell us the order of magnitude we’re working with, give us a starting point to think about the question, and let us estimate best/worst case power figures.

Units

For this article, I’m going to try to measure energy in kilowatt-hours (kWh) and watt-hours (Wh). One kilowatt-hour is the energy output of 1 kilowatt of power applied for 1 hour. (Energy (kWh) is power (kW) × time (h).) And the single watt-hour (Wh) is 1/1000 of a kWh. The other scale that comes up when talking about very large aggregates is the terawatt-hour (TWh), which is one billion kWh.

To give you an idea of how much energy that really is, here are some points of reference, in roughly ascending order:

- My phone’s battery capacity is ~0.010 kWh (10.45 Wh). Yours is probably closer to 0.015 kWh (15 Wh). (Phones have to be really energy-efficient!)

- My laptop runs at 0.03 kW, or 0.03 kWh per hour.

- If I forget to turn my laptop off at night, that costs 0.57 kWh.

- Elevators run at 6 kW, meaning they consume 0.1 kWh per minute of operation.

- Commercial GPUs can draw a maximum of 180 W to 350 W, which equals 0.18 kWh to 0.35 kWh per hour.

- PC gaming was estimated in a 2018 study to consume 75 TWh per year.

- Watching one hour of Netflix consumes 0.08 kWh to 0.8 kWh, depending on the scenario.

- Standard central air conditioning systems consume between 0.50 kWh and 3.75 kWh per hour.

- This summer, in my small Texas apartment, with my AC set on a timer to only ensure it’s comfortable when I’m at home and awake, my 300 W AC is cooling 45% of the time, which would be 3.24 kWh per day.

- The energy produced from burning 1 gallon of gasoline is approximately 36 kWh. (At 30 MPG, this is 0.84 miles per kWh.)

- The average U.S. household consumes about 10,500 kWh of electricity per year, or 28.8 kWh per day.

- 1 terawatt-hour (TWh) powers approximately 95,000 homes for one year.

- Running an electronic billboard for one day consumes ~450 kWh.

- Manufacturing an average (1,481 kg) vehicle consumes 17,200 kWh.
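Several of the figures in that list are just power × time, so they can be checked directly. This is a minimal sketch; the helper name `energy_kwh` and the variable names are my own, and the inputs are the numbers quoted in the list:

```python
def energy_kwh(power_kw, hours):
    """Energy (kWh) = power (kW) x time (h)."""
    return power_kw * hours


KWH_PER_TWH = 1_000_000_000  # 1 TWh is one billion kWh

elevator_per_minute = energy_kwh(6, 1 / 60)   # 6 kW elevator running for one minute
ac_per_day = energy_kwh(0.3, 24 * 0.45)       # 300 W AC cooling 45% of a 24-hour day
household_per_day = 10_500 / 365              # annual household use spread over a year
homes_per_twh = KWH_PER_TWH / 10_500          # households powered for a year by 1 TWh

print(f"elevator: {elevator_per_minute:.2f} kWh per minute")
print(f"AC: {ac_per_day:.2f} kWh per day")
print(f"household: {household_per_day:.1f} kWh per day")
print(f"homes per TWh: {homes_per_twh:,.0f}")
```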

Number examples

So that’s a rough outline of the question. We know the numbers are going to vary, but the data should still tell us about trends and scale. Here’s what it looks like broken down:

AI power requirements can be broken into two general categories: training and inference.

Training a model is the process of building it for the first time. This only has to be done once per model, although models have to be retrained in order to add data.

Inference is using a model that already exists. Inference — using the AI, either for categorization2 tasks or generating new text/images — takes orders of magnitude less power per operation, although the cumulative power requirements of many operations at scale still add up.

Creating a new model or releasing a new revision of an AI product represents a training operation. Using an existing model to generate a chat response or a diffusion image is an inference operation.

Training

Training costs are proportional to the amount of data the model is trained on. The more data points there are, the longer the training takes. This means that it’s always possible to make training more expensive by throwing more data in. There’s no strict upper bound on how expensive training can get, but we can still estimate a range based on how people are actually doing training in practice.

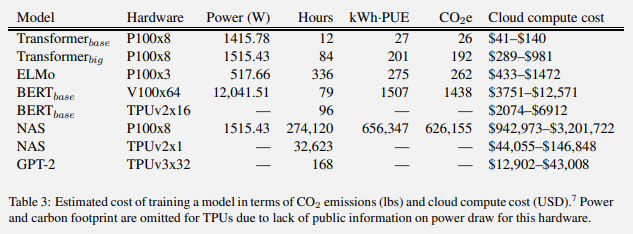

In 2019, the cost of training a language model on standard hardware ranged from 17 kWh to 174 kWh3:

In 2022, training costs for the BLOOMz model family of small LLMs were benchmarked, and the range for total training costs was roughly 10,500 kWh to 51,686 kWh:

Luccioni, A. S., Jernite, Y., & Strubell, E. (2023). Power Hungry Processing: Watts Driving the Cost of AI Deployment? (Truncated) Table 5: The BLOOMz models from our study with their training energy cost (from [Luccioni]), finetuning energy cost (from [Muennighoff]) …

| | BLOOMz-7B | BLOOMz-3B | BLOOMz-1B | BLOOMz-560M |
|---|---|---|---|---|
| Training energy (kWh) | 51,686 | 25,634 | 17,052 | 10,505 |
| Finetuning energy (kWh) | 7,571 | 3,242 | 1,081 | 543 |

But it is possible to pour massive amounts of energy into training language models. The more data you use as input, the more work is required to train a satisfactory model. In more recent history, Meta’s Llama 3 large language model’s small and large sizes were trained in 2024 for 1.3 and 6.4 million 700-watt GPU hours, respectively, with a maximum theoretical power usage of 910,000 kWh and 4,480,000 kWh.

Generative models go through the same process of training. The Stable Diffusion v2 model was trained on A100 PCIe 40 GB cards, a specialized AI GPU that can pull a maximum of 300 W, running for a combined 200,000 hours. 300 W for 200,000 hours gives a total energy consumption of 60,000 kWh. This is a high bound that assumes full usage of every chip for the entire period; SD2’s own carbon emission report indicates it likely used significantly less power than this, and other research has shown it can be done for less.
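Those upper bounds are all the same calculation: GPU-hours multiplied by the maximum draw per GPU. A minimal sketch, where the function name is hypothetical and the inputs are the figures quoted above:

```python
def max_training_energy_kwh(gpu_hours, max_watts):
    """Upper bound: every GPU drawing its maximum power for every hour."""
    return gpu_hours * max_watts / 1_000  # watt-hours to kilowatt-hours


llama3_small = max_training_energy_kwh(1_300_000, 700)
llama3_large = max_training_energy_kwh(6_400_000, 700)
stable_diffusion_2 = max_training_energy_kwh(200_000, 300)

print(f"Llama 3 (small): {llama3_small:,.0f} kWh")
print(f"Llama 3 (large): {llama3_large:,.0f} kWh")
print(f"Stable Diffusion v2: {stable_diffusion_2:,.0f} kWh")
```

Because this assumes full load for the whole run, real consumption should land somewhere below these figures.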

So the energy consumption of model training is somewhere in the range of 11,000 kWh to 52,000 kWh for moderate-sized LLMs, 40,000 kWh to 60,000 kWh for more expensive image generators, and up to 5,000,000 kWh for global-scale LLMs like Llama and ChatGPT.

Inference

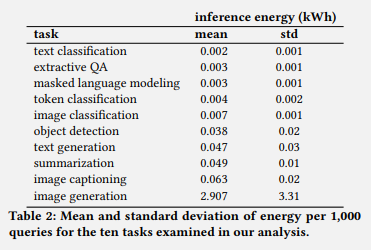

An inference operation (using an AI model that’s already been trained) takes energy proportional to the model’s complexity. Again, this is intuitive: if the dictionary is larger, it takes more time to search. A response from a model trained on vast amounts of data takes more processing time than a simple one trained on a minimal set. A chatbot that returns answers in complex language takes more energy than a tool limited to yes/no answers. A large image takes more energy to generate than a small one. In other words, we expect the computation power required will be proportional to both the domain and range of the function.

The trend is that categorization2 and recognition operations are less expensive than generation operations (0.002 vs. 0.007 Wh).

LLM-based text processing is slightly more expensive. Other research finds that a ChatGPT text generation operation takes 2.9 Wh to 4.0 Wh, and 1,000 messages would take 2.9 kWh to 4 kWh.

Google’s published estimates indicate that 3 Wh is also the energy cost of each interaction with their LLMs.

But the costs of image generation are higher. Luccioni et al. (in the table above) found that among generation operations, text generation is less expensive than image generation (0.047 vs. 2.9 Wh). The most popular generative image model that can be run on consumer GPUs is Stable Diffusion. Benchmarking with codecarbon, with 20 samples, Stable Diffusion 1.5 takes ~0.09 Wh per image. Images from diffusion models can reach a cost of 3 Wh/image as a high upper bound, but reaching this requires a larger model, and 100+ iteration steps as opposed to the standard 20 to 30.

Power Hungry Processing: Watts Driving the Cost of AI Deployment?

Figure 1: The tasks examined in our study and the average quantity of carbon emissions they produced (in g of CO2eq) for 1,000 queries. N.B. The y axis is in logarithmic scale.

We can also estimate the energy costs of modern black-box services that don’t release this data by using what we do know. Midjourney runs in real time, and takes 30–60 seconds to generate a batch of four images. As a high bound, if that process is running on a top-of-the-line GPU running at a full 450 W for the full 60 seconds, that represents energy consumption of 7.5 Wh per image set, and 1.88 Wh per subimage.
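The same back-of-the-envelope estimate can be written out as follows. This is a sketch under the stated worst-case assumptions (a 450 W GPU at full load for the whole generation time); the function and variable names are hypothetical:

```python
def energy_wh(power_watts, seconds):
    """Energy in watt-hours for a device drawing power_watts for a number of seconds."""
    return power_watts * seconds / 3_600


IMAGES_PER_BATCH = 4

batch_high = energy_wh(450, 60)   # full 60 seconds at full power
batch_low = energy_wh(450, 30)    # same GPU, 30-second generation
per_image_high = batch_high / IMAGES_PER_BATCH

print(f"batch: {batch_low} to {batch_high} Wh, per image at most {per_image_high} Wh")
```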

So the energy consumption of an inference operation is somewhere in the range of 1.0 Wh to 7.0 Wh for standard operations, and 4 Wh to 7.5 Wh for more expensive jobs like image or video generation.

7.5 Wh per shot is expensive for a single computer operation. That takes us out of the realm of normal high-performance processing like arithmetic and into the realm of things like visual effects or 3D rendering. But that makes sense when you remember that the work being done is actually visual effects and 3D rendering. Relatively complex work, relatively high cost.

Training dwarfs inference

You probably spotted that a training operation is much, much more expensive than using the model once it exists. Training, categorically, consumes orders of magnitude more energy than inference. This is intuitive: generating an index (like a dictionary) in the first place is expensive, but the purpose of the index is to make many future lookups quick and easy.

Here is the full table for the BLOOMz model families, including the interesting “cost parity” calculation:

Luccioni, A. S., Jernite, Y., & Strubell, E. (2023). Power Hungry Processing: Watts Driving the Cost of AI Deployment? Table 5: The BLOOMz models from our study with their training energy cost (from [Luccioni]), finetuning energy cost (from [Muennighoff]), inference cost (from the present study), and cost parity, as the number of inferences required to sum to the training cost.

| | BLOOMz-7B | BLOOMz-3B | BLOOMz-1B | BLOOMz-560M |
|---|---|---|---|---|
| Training energy (kWh) | 51,686 | 25,634 | 17,052 | 10,505 |
| Finetuning energy (kWh) | 7,571 | 3,242 | 1,081 | 543 |
| Inference energy (kWh) | 1.0 × 10⁻⁴ | 7.3 × 10⁻⁵ | 6.2 × 10⁻⁵ | 5.4 × 10⁻⁵ |
| Cost parity (# inferences) | 592,570,000 | 395,602,740 | 292,467,741 | 204,592,592 |

The amount of energy used by an inference operation is hundreds of millions of times smaller than the energy used to train its model.
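The cost parity row can be approximately reproduced from the other three rows. One caveat: the published figures only line up (to within rounding of the inference cost) when finetuning energy is added to training energy. That is my reading of the numbers, not something the table caption spells out. A minimal sketch, with a hypothetical function name:

```python
# (training kWh, finetuning kWh, inference kWh) from Table 5
MODELS = {
    "BLOOMz-7B": (51_686, 7_571, 1.0e-4),
    "BLOOMz-3B": (25_634, 3_242, 7.3e-5),
    "BLOOMz-1B": (17_052, 1_081, 6.2e-5),
    "BLOOMz-560M": (10_505, 543, 5.4e-5),
}


def cost_parity(training_kwh, finetuning_kwh, inference_kwh):
    """Number of inferences whose total energy equals the one-time build cost.

    Assumption: the build cost is training plus finetuning energy.
    """
    return (training_kwh + finetuning_kwh) / inference_kwh


for name, (train, finetune, infer) in MODELS.items():
    print(f"{name}: about {cost_parity(train, finetune, infer):,.0f} inferences")
```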

Although inference costs are very small, they depend on having a trained model, which could be a hidden cost. Additionally, while it’s possible to measure and estimate the power usage of inference operations, companies can simply refuse to disclose energy use statistics for training, which is a more blatant way to hide training costs.

Data centers

Most AI processing, whether training or inference, is done at scale in dedicated data centers. This uses a considerable amount of energy: data centers are already significant energy consumers, and increased load from AI work only exacerbates that.

If you’re not familiar, data centers are dedicated facilities for running servers. Data centers are “the cloud”: instead of running your own servers, you can rent computer power from experts who are very good at keeping computers from turning off. For the last decade, it’s been true that any dedicated service (whether it’s a cloud service, chat program, or just any website) is probably running in a data center.

Data centers are what make all the good stuff go.

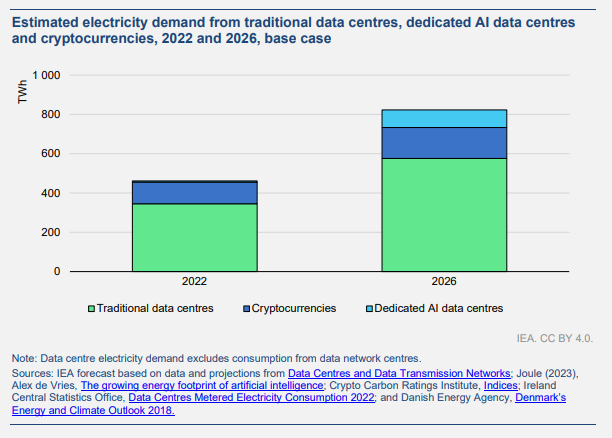

While different kinds of energy use aren’t individually tracked, for the purposes of analytics, “data center” is a discretely tracked category of power consumer. And data center energy use (combined) has been continually rising. From Bloomberg:

AI Is Already Wreaking Havoc On Global Power Systems | Bloomberg Altogether, data centers use more electricity than most countries

Only 16 nations, including the US and China, consume more

Sources: Bloomberg analysis of BloombergNEF and DC Byte data

Note: Data center energy consumption through Q1 2024. National energy consumption levels are actual through 2022 and projected for 2023 and 2024.

Data centers used 200 to 500 TWh in 2018, or at least 0.55 billion kWh per day (taking the low end of that range). By 2024, that number increased to 350 TWh, or 0.96 billion kWh/day. As their graph points out, both of those numbers are higher than the total use of some countries.
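For reference, converting an annual figure in TWh to a daily figure in billions of kWh is a single division, since 1 TWh is one billion kWh. A small sketch (the function name is my own); note that the 2018 daily figure corresponds to the 200 TWh low end of the range:

```python
def twh_per_year_to_billion_kwh_per_day(twh_per_year):
    """1 TWh = 1 billion kWh, so dividing by 365 gives billion kWh per day."""
    return twh_per_year / 365


daily_2018_low = twh_per_year_to_billion_kwh_per_day(200)
daily_2018_high = twh_per_year_to_billion_kwh_per_day(500)
daily_2024 = twh_per_year_to_billion_kwh_per_day(350)

print(f"2018: {daily_2018_low:.2f} to {daily_2018_high:.2f} billion kWh/day")
print(f"2024: {daily_2024:.2f} billion kWh/day")
```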

AI represents a minority of data center power use

This is a key point that trips people up: not all data center processing is AI. Data centers are already responsible for almost all cloud processing and web services. AI power usage is a tiny (but growing) minority of the consumptive demand.

So how much of the total power data centers consume does the AI subset actually use?

AI has not significantly inflated the demand… yet. As Kyle Orland points out for Ars, you can actually see on the Bloomberg chart that the AI excitement that Dall-E sparked in 2022 doesn’t seem to have accelerated power growth:

Kyle Orland, Taking a closer look at AI’s supposed energy apocalypse | Ars Technica Bloomberg asks one source directly “why data centers were suddenly sucking up so much power” and gets back a blunt answer: “It’s AI… It’s 10 to 15 times the amount of electricity.”

Unfortunately for Bloomberg, that quote is followed almost immediately by a chart that heavily undercuts the AI alarmism.

The massive growth in data center power usage mostly predates the current mania for generative AI (red 2022 line added by Ars).

That chart shows worldwide data center energy usage growing at a remarkably steady pace from about 100 TWh in 2012 to around 350 TWh in 2024. The vast majority of that energy usage growth came before 2022, when the launch of tools like Dall-E and ChatGPT largely set off the industry’s current mania for generative AI. If you squint at Bloomberg’s graph, you can almost see the growth in energy usage slowing down a bit since that momentous year for generative AI.

So, within data center power use, we don’t see AI having caused a spike in power demand. But it probably will:

While well-informed, these are still estimations. Real data about the inner workings of data centers (as opposed to estimations) is proprietary information, when it exists at all. Since this includes data on how much power is allotted to AI, estimating the real amount of AI demand is tricky.

One data source that is available, though, is chip sales. In The growing energy footprint of artificial intelligence, Alex de Vries estimates a theoretical upper bound for AI-specific data center use from chip sale data. The maximum power usage would be for every AI-specific chip to be running at once drawing as much power as it could, so working backward, you can estimate the maximum amount of demand:

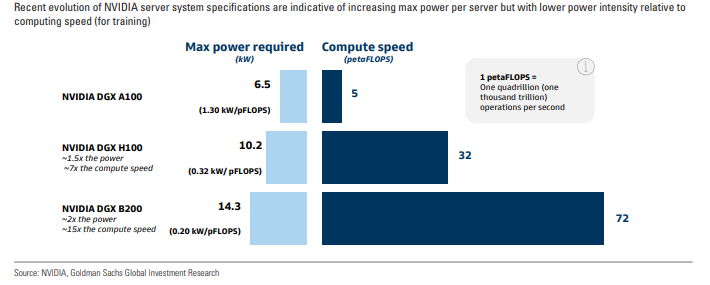

de Vries, A. (2023). The growing energy footprint of artificial intelligence. Joule, 7(10), 2191–2194. https://doi.org/10.1016/j.joule.2023.09.004 Given its estimated 95% market share in 2023, NVIDIA leads the AI servers market. The company is expected to deliver 100,000 of its AI servers in 2023. If operating at full capacity (i.e., 6.5 kW for NVIDIA’s DGX A100 servers and 10.2 kW for DGX H100 servers), these servers would have a combined power demand of 650–1,020 MW. On an annual basis, these servers could consume up to 5.7–8.9 TWh of electricity. Compared to the historical estimated annual electricity consumption of data centers, which was 205 TWh, this is almost negligible.

So, regardless of the work being done, those new servers can consume a maximum of ~9 TWh/year, because that’s how much hardware exists. These numbers are in line with a study from Goldman Sachs which estimates the total current power demand to be ~15 TWh/year, including demand from older and non-specialized chips.

So, for the power use of AI in data centers overall, we see an approximate current annual usage of ~9 to ~15 TWh, or roughly 3% to 4% of the ~350 TWh total data center power consumption.
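de Vries’s upper bound and the resulting share can be recomputed from the quoted inputs. This is a sketch with hypothetical function names; the 350 TWh total is the 2024 data center figure quoted earlier, and using it as the denominator is my assumption:

```python
HOURS_PER_YEAR = 24 * 365


def fleet_power_mw(servers, kw_per_server):
    """Combined draw of a server fleet running at full capacity, in megawatts."""
    return servers * kw_per_server / 1_000


def annual_energy_twh(power_mw):
    """MW x hours = MWh; one TWh is one million MWh."""
    return power_mw * HOURS_PER_YEAR / 1_000_000


def share_percent(ai_twh, total_twh=350):
    """AI energy as a percentage of total data center energy."""
    return 100 * ai_twh / total_twh


low_mw = fleet_power_mw(100_000, 6.5)     # all DGX A100 servers
high_mw = fleet_power_mw(100_000, 10.2)   # all DGX H100 servers
low_twh = annual_energy_twh(low_mw)
high_twh = annual_energy_twh(high_mw)

print(f"{low_mw:.0f} to {high_mw:.0f} MW, {low_twh:.1f} to {high_twh:.1f} TWh/year")
print(f"share of total: {share_percent(9):.1f}% to {share_percent(15):.1f}%")
```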

Data centers are high-capacity

Not all processing done in data centers is AI, and not all AI processing is done in data centers as part of that ~15 TWh. Most of the expense is, though.

All significant training is done in data centers. Depending on the model, inference operations either run in data centers (“in the cloud”), or locally (“on-chip” AI). Data centers can run much more powerful computing operations than consumer hardware, so they can run operations that are too computationally expensive to run locally. That computational expense also means those jobs require more power, which data centers have a greater capacity to support.

This divides work into two categories. Work that requires4 a data center is on a completely different scale of power usage than any software that can run locally with reasonable performance. Any operation that can run locally on your computer can only take as much power as the computer does. As a rule of thumb, compared to those TWh numbers, this is usually small enough that it can almost be discounted as a significant power consumption factor, compared to specialized operations that require the power capacity of a dedicated server farm. 15 minutes of local AI use will never take more energy than 1 hour of Fortnite on the same computer; the hardware can only use so much power.

Hyperscaling costs… down?

There are two5 major categories of data centers. Dedicated mid-tier and “hyperscale” data centers are facilities designed to be full-time data centers. But many servers aren’t run in these kinds of dedicated facilities, and are instead run ad-hoc, in a dedicated room or floor in an existing facility. This is the “server closet”.

Dedicated data centers use significantly more energy than server closets, in total. But professional design, architecture, and improved cooling systems mean these dedicated facilities are far more efficient than small server closets. Because dedicated data center facilities are designed from the ground up to be efficient data centers, they use every trick in the book to do it well.

What makes hyperscale data centers energy-intensive is the scale part: the cumulative cost of all the servers. If the work currently done in server closets were simply done in a dedicated data center instead, and nothing else happened, the total energy use would go down!

So, when it comes to comparing costs, looking at total consumption is often a misleading train of thought. There’s an interesting lesson about comparing cost and worth here, which is that it’s necessary to think in terms of proportions, not in net. We must think in terms of efficiency: energy cost is only meaningful when we tie the net change in energy to the value delivered. (Foreshadowing!)

Proportional Comparison

So, given an idea of the scale of energy required for those different jobs, “how much is that”?

This is a nontrivial question. The negligent way is to just insert the numbers from AI into that “points of reference” list from before and call that Content. But to just compare these numbers directly and end there is to fall into the proportionality trap. We can’t just look at the net totals, we have to consider what value is actually being produced by the expense or else we haven’t actually compared the two things meaningfully at all.

What we should care about is proportional cost, meaning the net cost divided by the value produced, or “kWh spent per utility produced.”

(This is the inverse of efficiency, which is value/cost.)

When thinking about the energy consumption of AI, the question on the table is “is spending energy this way a waste, or is it more efficient, or is it almost the same?”

This is the task of weighing costs against the benefits they provide, rather than net values:

Jun 24, 2024: When it comes to power usage, I don’t understand the “air conditioning number more bigger” talking point. You have to weight costs against the benefits they provide. Air conditioning ranges from human comfort to inhabitability. Some of the most important goods that exist.

I have seen some work that does address this question, like 'depleting the global power and water supply' – @txttletale on Tumblr, and more formally Milja Moss, “LLM Water And Energy Use”:

Milja Moss, “LLM Water And Energy Use” When determining whether you should be using services like ChatGPT, the question then becomes: “Are 1600 responses from ChatGPT as useful to me as 2 hours of video games?”

I think this is a deeply important train of thought that’s generally overlooked, even though it seems to be the crux of the question. This is a mistake that happens when you directly apply the conclusions from research done on net impact to ideas of individual use, when there’s actually proportional conversion and reassessment that needs to be done.

For my pseudo-formula I’m borrowing the word “utility” here from utilitarian philosophy. If you’re not familiar, don’t let the dryness of the word scare you: utility includes everything good, including reducing human suffering. It is “that property in any object, whereby it tends to produce benefit, advantage, pleasure, good, or happiness … [or] to prevent the happening of mischief, pain, evil, or unhappiness to the party whose interest is considered.” In other words, goodness.

Also, even though I put utility in a formula for illustrative purposes, I don’t want to fall down the rabbit hole of quantifying utility into “points”, or something. All we need for now is to understand it as a subjective idea. This is how you sidestep the trap of quantifying “value” in dollars, which fails to capture huge categories of things that are good and ought to exist. We can make meaningful value judgements without quantifying it.

The utility value of AI is highly contested

The problem we immediately run into if we try to think about the proportional cost of AI is that there is no consensus on whether it’s ultimately useful. This matters because if it’s not useful, all energy spent on it is automatically wasted, so that’s our answer right there.

This is a deep topic, and one I plan to talk about in depth in another article (which I have a lot of notes for already). But, if you’re not familiar, here is a quick summary of the discourse.

I think it’s self-evident that AI services can be useful. If an AI service can answer a question correctly, that provides value. If one can generate usable text, or a usable graphic, that provides value. And, usually, this can all be done by AI much faster than doing it with human labor. By virtue of being a tool that sometimes works, the utility provided has to be something above zero.

I am not the first person to land on this position. Quoting from a few thoughtful people:

Kyle Orland, “Taking a closer look at AI’s supposed energy apocalypse” | Ars Technica If you cherry-pick the worst examples of AI screwups, it’s easy to see the entire sector as a misguided use of limited money and energy resources. But if you’re a programmer who’s getting twice as much done with AI coding tools or a customer service manager seeing productivity gains from employees who can consult with AI, that may seem like electricity well spent.

Nicholas Carlini, “How I use ‘AI’” …the reason I think that the recent advances we’ve made aren’t just hype is that, over the past year, I have spent at least a few hours every week interacting with various large language models, and have been consistently impressed by their ability to solve increasingly difficult tasks I give them. And as a result of this, I would say I’m at least 50% faster at writing code for both my research projects and my side projects as a result of these models.

…

You might think—why would someone write an entire article justifying that language models are useful??! Isn’t that obvious?!? But there seem to be a (large?) contingent of people out there—in the academic literature, in the software engineering space, and also in the media sphere—who proclaim widely that LLMs contribute nothing, are just another hype cycle, and in a few years will die having had no impact on the world. I will be arguing these people are wrong because current LLMs are already useful.

So that’s the position to beat. But there are a number of arguments that try to do this:

There are moral arguments that AI is inherently unethical, not because of its efficiency, but due to problems with training methods, data sources, and intellectual property violations.

There are utilitarian arguments that AI-generated artifacts are generally harmful to public life, because they pollute the internet with well-formatted-but-wrong documents, making it harder for everyone to learn true things across the board.

There are labor arguments that AI services will allow companies to replace whole categories of human jobs with Capital they can own outright, allowing the owners of the new means of production to take a much larger share of revenue as pure profit, enriching themselves while workers go unpaid. What makes this particularly dangerous is that, from the perspective of the corporations, this might be the most efficient thing to do even if the resulting work is worse than people’s.

For the sake of the energy question, let’s put a pin in the ethical question, since that’s really a separate topic. “Is using AI an unethical waste of electricity” is the only place these topics overlap, and whether it’s a waste of electricity or not is already the question we’re trying to answer. Otherwise, the ethical question is on a totally separate dimension.

If AI usage is inherently evil or necessary to oppose as part of a labor struggle, we don’t really care about the energy question because the answer is AI mustn’t be used no matter what. If it’s unethical it doesn’t matter how efficient it is, it shouldn’t be used at all. Energy isn’t really a factor in that case.

So for this article about energy, let’s make the middle-of-the-road assumption that AI services are useful and valuable, but not magically so. Let’s just conceptualize AI as a new tool that can be used productively, even if we’re not convinced the energy cost is low enough to make it worth using. And, at least in some cases, AI generative software can produce outputs comparable to human work in a practical sense. (Obviously this has a massive social and economic impact, but for now let’s just use it to do energy comparisons.)

So if AI is at least somewhat useful, and we know the rough ranges for the energy costs, how does that proportional cost compare to other things?

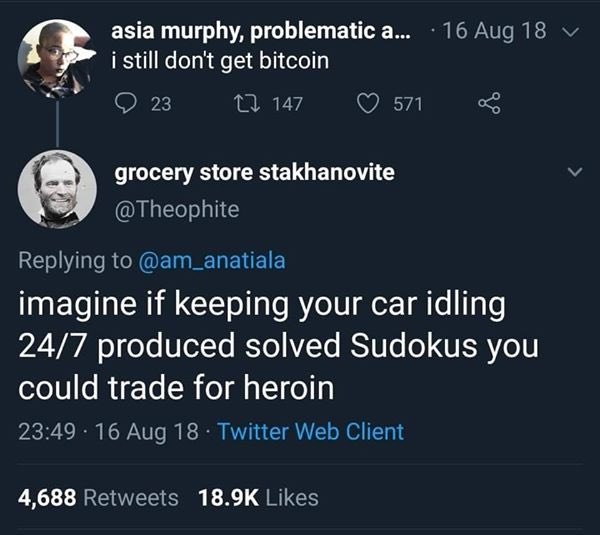

Cryptocurrency

As I mentioned earlier, the AI boom feels a lot like the blockchain cryptocurrency push of a few years ago. Like cryptocurrency, AI is a tech fad, it requires data centers, it consumes more energy than a webserver… the comparison is extremely natural.

In fact, naturally seguing from cryptocurrency to AI is what Alex de Vries (the expert I’ve already cited) did:

Alex de Vries is a PhD candidate at the VU Amsterdam School of Business and Economics and the founder of Digiconomist, a research company dedicated to exposing the unintended consequences of digital trends. His research focuses on the environmental impact of emerging technologies and has played a major role in the global discussion regarding the sustainability of blockchain technology.

So people are coming off cryptocurrency and applying that same energy to AI, and I think it makes complete sense to do that. But, while the two trends look similar from a distance, they’re really very different, for two major reasons.

Scale of energy demand

Blockchain technology is designed to be energy intensive, as prohibitive expense is actually what creates the scarcity and therefore the value. In contrast, AI tech is highly motivated to increase efficiency as much as possible. (Since, y’know, it’s a real service that does things.)

Cryptocurrency is also equally energy intensive at all times. In contrast, the major cost of AI development is the front-loaded training stage, and subsequent inference is significantly less expensive.

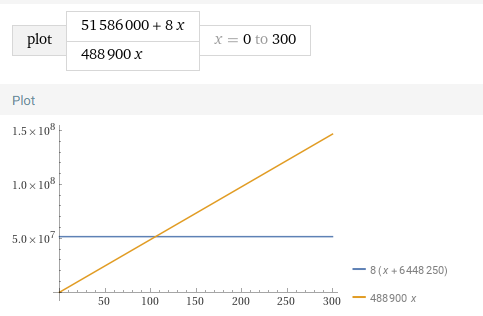

In practice, that means crypto outspends AI enormously. The “transactional cost” of using AI is less than cryptocurrency transactions by many orders of magnitude, which is to be expected. At time of writing, processing a single bitcoin transaction consumes 488.90 kWh, and increasing block difficulty means that number will increase over time.

For comparison, if 51,586 kWh is the high end of the range for the cost of training a model, that’s just under 106 bitcoin transactions. Except training only has to be done once, and according to its own metrics for success, bitcoin will continue to make transactions forever. So, after only about 106 transactions, bitcoin’s ongoing cost surpasses the one-time training cost of the AI model, and keeps increasing indefinitely.

(And this model is actually far too generous to bitcoin, whose transactional costs don’t stay fixed at 488.90 kWh, but intentionally increase over time!)
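To make that comparison concrete, here is a minimal Python sketch of the fixed-cost model. It uses only the two figures quoted in this section (51,586 kWh for training, 488.90 kWh per transaction). The function and variable names are my own, and holding the per-transaction cost constant is the same generous simplification noted above.

```python
import math

TRAINING_KWH = 51_586      # high-end training estimate quoted above
BITCOIN_TX_KWH = 488.90    # energy per bitcoin transaction quoted above


def bitcoin_cumulative_kwh(transactions: int) -> float:
    """Total energy after N transactions, assuming a fixed per-transaction cost."""
    return transactions * BITCOIN_TX_KWH


# One-time training cost expressed as a number of bitcoin transactions.
equivalent_transactions = TRAINING_KWH / BITCOIN_TX_KWH

# First whole transaction at which bitcoin's running total passes the training cost.
crossover = math.ceil(equivalent_transactions)

print(f"Training is worth {equivalent_transactions:.1f} transactions")
print(f"Bitcoin's running total passes training at transaction {crossover}")
```

Everything after the crossover is pure ongoing cost for bitcoin, while the training bill stays where it is.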

For our proportional cost, utility/expense, that’s the denominator. AI’s expense is significant, but minuscule compared to blockchain’s. So that’s a clear victory for AI on the net cost.

Productive output

So, how useful is blockchain tech? Is it turning that huge amount of energy it consumes into real value?

The payment processing monopolies sit comfortably undisrupted while “crypto” devolves into a way to gull marks and commit securities fraud. NFTs have not somehow made scarcity a good thing, and buying lots in a company town has not redistributed power back to the people.

Since blockchain is almost worthless, the proportional energy cost of AI is much, much lower than cryptocurrency. As the energy costs for blockchain calculations go up, and the value goes toward zero as the rugs are pulled, AI becomes infinitely better.

So maybe that was a lowball. But I still see the cryptocurrency/AI energy comparison being made all the time, and it doesn’t make sense. They’re both ways computers can use electricity, but other than that they’re in completely different leagues.

Image generation

A much closer race is AI image generation. I think this is a much better exercise, since image generators were the most expensive models to train and use.

Is using ~3 Wh to 7.5 Wh to AI-generate an image a waste of energy compared to just doing the real work yourself?

Photography

First, if you want a photograph, the answer is automatically no. If you need to go out and do a professional photoshoot, transportation alone is going to consume more (and dirtier!) energy than an image generator. At ~30 miles per gallon, a 15-mile round trip consumes the equivalent of 18 kWh, which is enough to generate 2,400 Midjourney images at 7.5 Wh a pop. And that’s not even considering the costs of running a camera and lighting. A $15 ring light runs on 10 W. That’s tiny, but leave it on for just 45 minutes and that’s another Midjourney image.

Even if you’re a stock photography company and you’re factory-farming photographs, you can’t be more energy efficient than image generators. If you minimized your energy costs by making one trip in one vehicle and taking photographs with that one ring light for 8 straight hours, spending just 18.1 kWh, you’d have to do nothing all day but take 5 photos per minute just to break even with Midjourney. And god forbid, if you heat your lunch in a 700 W microwave, that’s another Midjourney image every 38 seconds.
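The photoshoot arithmetic can be checked with a few lines of Python. This is only a sketch: every figure is taken from the two paragraphs above (including the 18 kWh trip estimate, which I take as given), and the variable names are mine.

```python
TRIP_KWH = 18.0        # 15-mile round trip at ~30 mpg, as estimated above
RING_LIGHT_W = 10      # ring light power draw
MICROWAVE_W = 700      # microwave power draw
MIDJOURNEY_WH = 7.5    # energy per generated image (high end)
SHOOT_HOURS = 8        # one full day of shooting

# Images you could generate with the energy of the trip alone.
images_per_trip = TRIP_KWH * 1000 / MIDJOURNEY_WH

# Whole-day shoot: one trip plus the ring light running the entire time.
shoot_wh = TRIP_KWH * 1000 + RING_LIGHT_W * SHOOT_HOURS
photos_per_minute = shoot_wh / MIDJOURNEY_WH / (SHOOT_HOURS * 60)

# How long each appliance runs before it has used one image's worth of energy.
ring_light_minutes = MIDJOURNEY_WH / RING_LIGHT_W * 60
microwave_seconds = MIDJOURNEY_WH / MICROWAVE_W * 3600

print(f"Trip alone: {images_per_trip:.0f} images")
print(f"Full-day shoot: {shoot_wh / 1000:.2f} kWh, {photos_per_minute:.2f} photos/min to break even")
print(f"Ring light: {ring_light_minutes:.0f} min per image; microwave: {microwave_seconds:.1f} s per image")
```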

And, remember, Midjourney is the most expensive option on the list! There are alternatives that spend even less energy than even the cheapest photoshoot. It’s no contest.

Illustration

Other forms of art, like illustrations, are harder to compare, because it’s inherently difficult to compare any two categories of art. It’s hard enough to compare any two artists, regardless of whether one of them is using AI. So the case where AI-generated art can realistically substitute for traditional illustration work is going to be less common. Whether an AI product is comparable depends on too many factors, like the subject matter, the desired style, and the skill of the prompter.

Say you’re a fiction writer, but not an artist, and you want a few illustrations to put in your novel in order to better communicate your story. Does using an image generator consume more power than commissioning an artist to make a digital illustration?

I don’t have a Photoshop license, so I can’t verify an exact benchmark myself, but let’s roughly estimate illustration software as using something in the range of 30 W (my laptop idling with no graphics programs open) to 300 W (medium-spec GPU running at 100%), depending on how powerful it is.

We’ve already estimated AI image generation as taking 3 Wh to 7.5 Wh per image, because the amount of time taken is predictable. How many Wh a traditional digital illustration takes will be 30 W to 300 W multiplied by how long the software is running, in hours.

In order for 30 W software to use less than 7.5 Wh, it will need to finish running in less than 15 minutes. If the software uses the full 300 W, in order to use less than 7.5 Wh, it has to finish in 1 minute, 30 seconds.

But in real life, a commissioned illustration takes at least 2–3 hours. That doesn’t make the AI generator the right choice, but it does mean it’s not a waste of energy.
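Here is that break-even calculation as a small Python sketch. The 30 W and 300 W bounds and the 7.5 Wh image cost are the rough estimates from above, not measurements, and the helper names are hypothetical.

```python
IMAGE_WH = 7.5  # high-end cost of one generated image, from the estimate above


def break_even_minutes(image_wh: float, software_watts: float) -> float:
    """Minutes the software can run before using as much energy as one generated image."""
    return image_wh / software_watts * 60


def session_wh(software_watts: float, hours: float) -> float:
    """Energy used by a drawing session of the given length, in watt-hours."""
    return software_watts * hours


for watts in (30, 300):
    minutes = break_even_minutes(IMAGE_WH, watts)
    print(f"{watts} W software breaks even after {minutes:.1f} minutes")

# A 2-hour commission at the low and high ends of the assumed power range.
for watts in (30, 300):
    print(f"2 hours at {watts} W uses {session_wh(watts, 2):.0f} Wh")
```

Even at the idle-laptop end of the range, a two-hour session uses several times the energy of one generated image.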

Fri Jul 19 09:57:19 +0000 2024: EXTREME napkin math so take with a grain of salt, but from what i'm researching w/ benchmarks, an hour of continuous stable diffusion use is worth basically, to be generous, 3-4 hours of another typical art program in terms of energy load

Fri Jul 19 09:58:17 +0000 2024: so basically if you spend one hour of GPU time making an image in stable diffusion, as long as it would've taken you more than 2 hours of photoshop, 3-4 hours of CSP/Krita, or 2 hours of blender, using stable diffusion actually uses *less* energy. AGAIN, NAPKIN MATH

Fri Jul 19 09:59:04 +0000 2024: i think a more realistic conclusion to draw is, basically, most of these programs are roughly comparable in terms of order of magnitude of energy draw - so if you have saved time by using ai generation, you've likely saved energy as well

Fri Jul 19 10:00:00 +0000 2024: oh and if you're streaming while you draw you are absolutely, definitely using more power than stable diffusion alone, streaming is a huge energy and gpu load hog

In other words, Luccioni’s proportional cost calculation makes sense:

Power Hungry Processing: Watts Driving the Cost of AI Deployment?

Or take AI image generation, where one estimate suggests creating 1,000 generative AI images takes as much energy as driving about four miles in a car. If those images are as good or better than the ones you’d get more slowly from a human artist, the economic case for that energy usage is obvious.

Image training

But so far this has only been considering the transactional cost. There’s also the cost of building the model in the first place, which is a prerequisite for all these fast, cheap operations it enables. Is that one-time training cost high enough that it negates the benefits?

Let’s switch from Midjourney to Stable Diffusion, since we know both the inference cost (3 Wh/image) and training cost (~50,000 kWh).

Training an AI model produces a tool that can be used to reduce the effort involved in later work. Structurally, this model of a preparation phase before a tool is usable is parallel to how all tools work, and in this case particularly mirrors factory machinery. There’s a significant upfront cost, but that produces capital that can be used to make future operations faster and cheaper.

So if an AI is like equipment, how expensive is the equipment?

For image generators, we found that the training expense currently falls in the 40,000 kWh to 60,000 kWh range.

The 2020 study by Sato and Nakata, “Energy Consumption Analysis for Vehicle Production through a Material Flow Approach”, found that manufacturing an average (1,481 kg) vehicle consumes 17,200 kWh. What’s interesting about this study is they determined that the energy required to manufacture a vehicle (including energy costs associated with acquiring material) scaled proportionally with material mass, and modern manufacturing methods produce vehicles at 11.61 kWh/kg.

Just for fun, let’s imagine an image generator as physical machinery produced at the same cost. That would be a 3,500 kg to 5,200 kg piece of equipment, or 7,600 to 11,400 lb. That’s less than half the weight of one empty dump truck, which is a pretty reasonable thing to produce one of, if it makes work that much more efficient.
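For anyone who wants to redo that conversion, here is a quick Python sketch. It assumes the 11.61 kWh/kg figure applies linearly, which is the same playful assumption as above; the function name is mine.

```python
KWH_PER_KG = 11.61     # manufacturing energy per kg of vehicle (Sato & Nakata, 2020)
LB_PER_KG = 2.20462    # pounds per kilogram


def equivalent_mass_kg(training_kwh: float) -> float:
    """Mass of machinery you could build with the same energy as a training run."""
    return training_kwh / KWH_PER_KG


for training_kwh in (40_000, 60_000):
    kg = equivalent_mass_kg(training_kwh)
    print(f"{training_kwh:,} kWh is about {kg:,.0f} kg ({kg * LB_PER_KG:,.0f} lb)")
```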

But we don’t actually have to consider the training stage as a separate cost at all. If we know how many images are being generated, we can spread the training costs across the individual inferences.

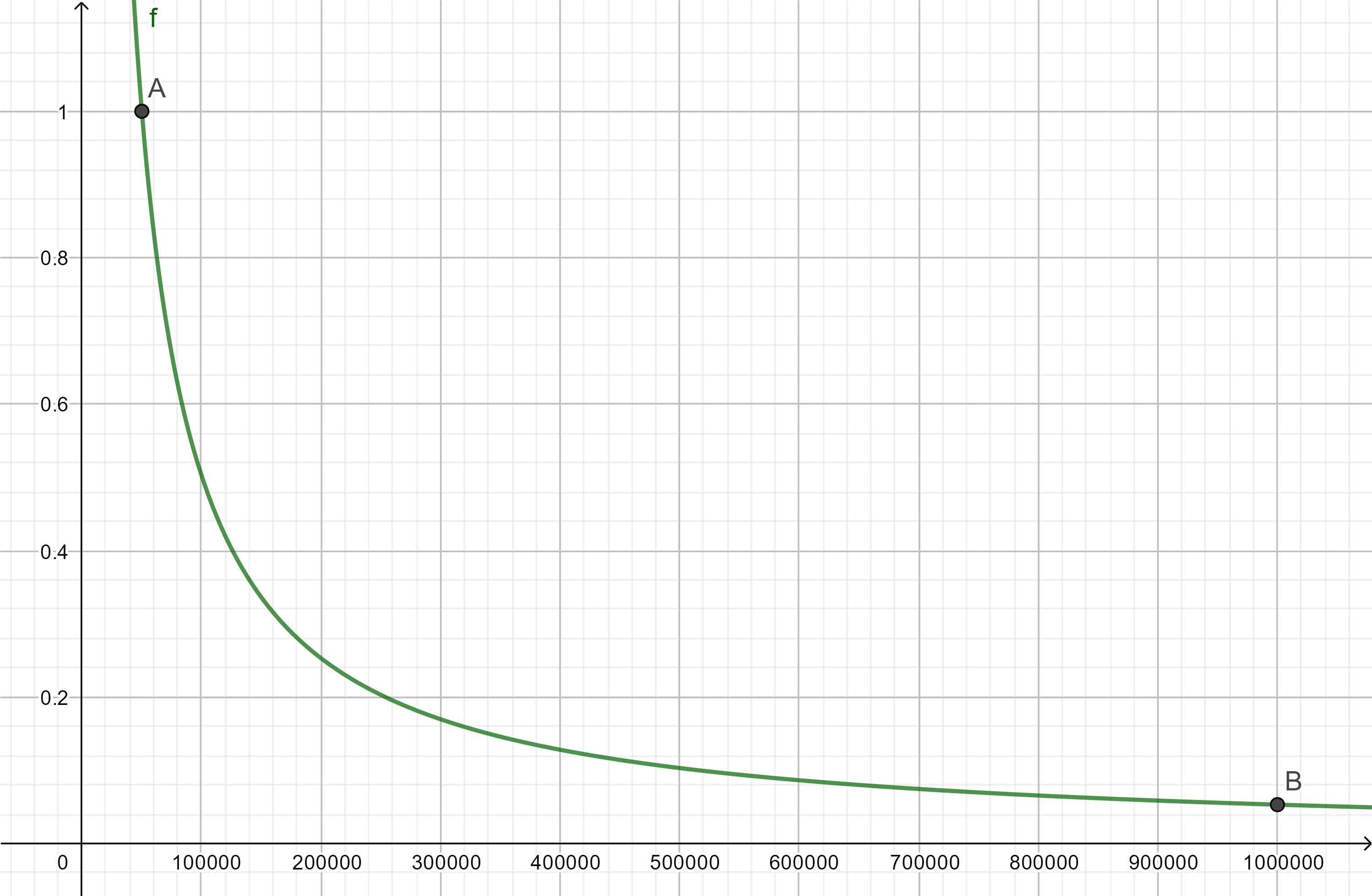

And if you’re not sure what one of the numbers is, you draw a graph. To find the cost in kWh/image, this gives us

$$y = \frac{50{,}000}{x} + 0.003$$

where y is the bundled cost per image, 50,000 is the training cost in kWh, 0.003 is the inference cost, and x is the total number of images ever generated, so each image is bundled with one share of the training costs. This creates this hyperbola:

As more images are generated in total, the cost per image is decreased, because the training cost is spread across more uses.

At point A, after 50,151 images, the cost comes down to 1 kWh/image. At point B, after 1,000,000 images, the cost lowers to about 0.053 kWh/image (53 Wh).

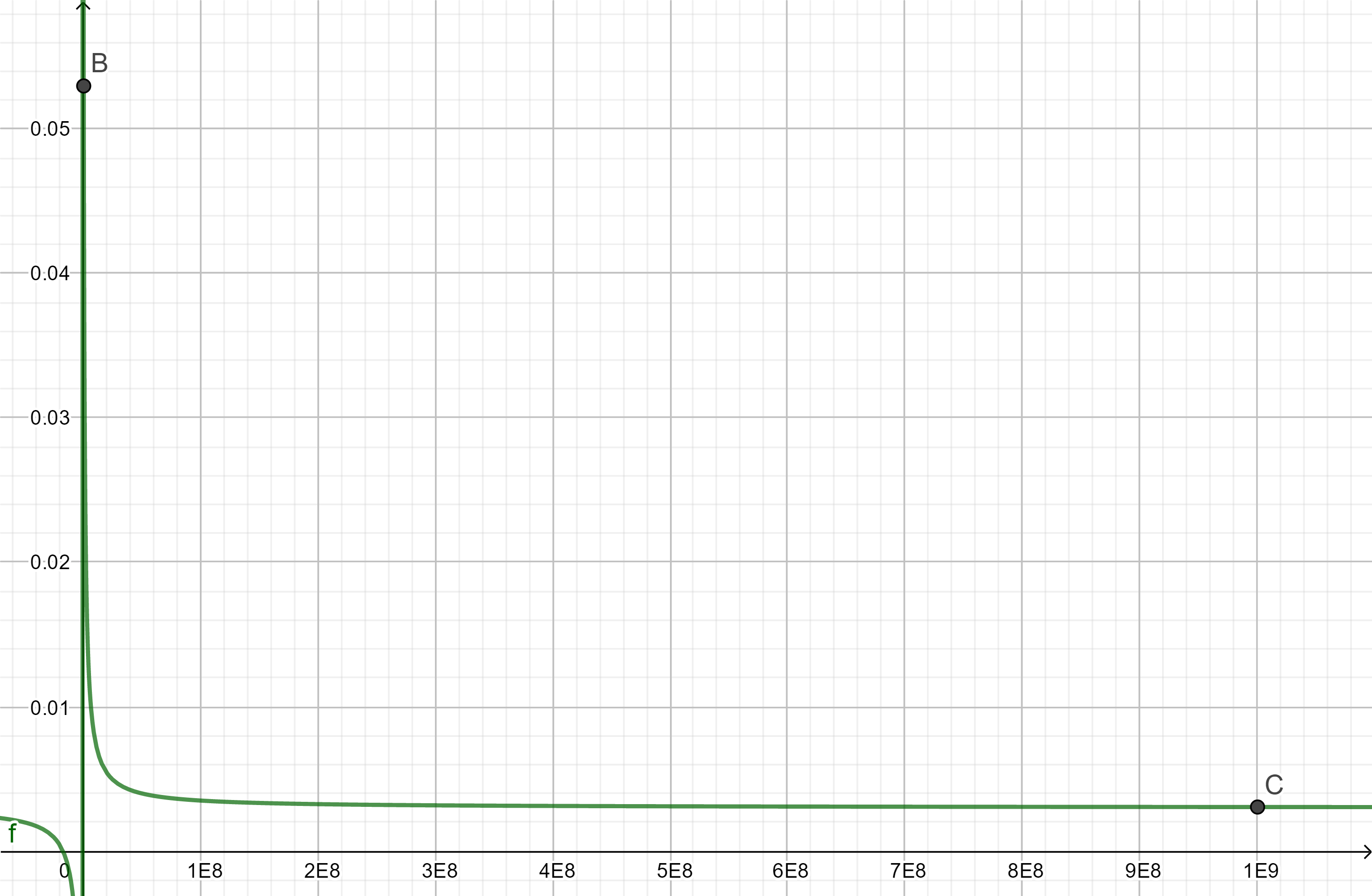

But 1,000,000 is still an absurdly low number. One single service, NovelAI, reports generating 4,800,000 diffusion images per day. The Stable Diffusion 1.5 model was downloaded 5,420,000 times from one site just in the last month, which likely represents hundreds of millions of image generations.

Given that, I’m reasonably confident in saying at least 1,000,000,000 Stable Diffusion images have been generated so far. That puts us at least past point C.

With 1,000,000,000 stable diffusions behind us, the cost per image is 0.00305 kWh, or 3.05 Wh. 3 Wh/image was already our assumed cost per image, and the cost of training the model initially has disappeared into the margin of error. In fact, this already happened a long time ago; with just 100,000,000 (1E8) images total, the cost per image is 3.5 Wh, and the line already looks almost flat.
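The amortization is easy to play with yourself. The sketch below assumes the Stable Diffusion figures used in this section (about 50,000 kWh of training, 0.003 kWh per inference) and treats the bundled cost as training divided by total images, plus inference. The function names are hypothetical.

```python
import math

TRAINING_KWH = 50_000    # one-time training cost assumed in this section
INFERENCE_KWH = 0.003    # per-image inference cost (3 Wh)


def cost_per_image_kwh(total_images: int) -> float:
    """Inference cost plus one equal share of the training cost."""
    return TRAINING_KWH / total_images + INFERENCE_KWH


def images_needed(target_kwh: float) -> int:
    """Smallest total image count at which the bundled cost drops to the target."""
    return math.ceil(TRAINING_KWH / (target_kwh - INFERENCE_KWH))


for total in (50_151, 1_000_000, 100_000_000, 1_000_000_000):
    print(f"{total:>13,} images: {cost_per_image_kwh(total) * 1000:10.2f} Wh/image")

print(f"Images needed to reach 1 kWh/image: {images_needed(1.0):,}")
```

Changing `TRAINING_KWH` to the 40,000 or 60,000 kWh ends of the range shifts the early part of the curve, but barely moves the tail.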

So, while image generation is among the more expensive AI tasks, it’s not true that it’s automatically wasteful. Compared to the alternatives, AI art is extremely efficient… provided you’re making work of value, and not just waste.

Research

We can apply this same logic to any task. First, consider the separate preparation/usage phases, then identify a comparable unit of work for each, then see how the energy usage requirements compare.

An LLM response takes 10-15x more energy than a Google search, sure. But does using a tool like ChatGPT as a research assistant consume more energy than searching for as long as it takes to get the same information? An LLM response just has to save you roughly 10 to 15 searches to break even, so as long as you’re really using it and not just asking something you could easily search yourself, it’s worth it.

This is something I’ve found makes ChatGPT genuinely useful: its ability to be a general conceptual index. If there’s a topic I’m not familiar with, I can describe the kind of thing I’m talking about in general terms, and ChatGPT can give me an outline of the topic to use as a launching point. To do that research with Google, I’d at least need to know what keywords to start with, which I often don’t.

For instance, here’s a question I asked back in 2023 (I don’t use ChatGPT much):

Some chocolate from a candy bar melts, but then rehardens in the refrigerator. Part of the chocolate separates into a tasteless white substance. What is the white substance?

Here I’m actually able to describe a phenomenon and work backwards to the terminology needed to do more robust research on the topic. (It was fat bloom.) With ChatGPT, I can ask a real question, get an approximate answer back that gets me on the right track, and refine from there with external research.

This ask-check-iterate loop seems extremely valuable when compared with traditional research. In order to get this information you’d normally need to be a subject-matter expert yourself or have access to one. Alternatively, with something like Wikipedia, you could find a general topic and try to browse through lists of subtopics; if what you were looking for was named clearly, you might be able to find it from there. But LLMs are able to replace that task of searching through the whole space with something much closer to an automatic, indexed lookup.

Turning a task from a search a human has to manually perform into a one-shot lookup is an incredible advancement. This enables people to do work that would otherwise take many man-hours of research and consultation to accomplish without the LLM tools. As for energy-efficiency, training and inference costs for LLMs are both less than the energy costs of image generators, and we’ve already seen how that went.

Entertainment

Taking a step back from industrial comparisons, AI use even seems fine when compared to entertainment. Thinking about whether a use “pays for itself” is good, but using it just for fun is fine too.

As I mentioned earlier, a computer can only draw so much power.

Watching one hour of Netflix takes around 0.08 kWh to 0.8 kWh. Let’s take the conservative assumption that in your scenario, watching an hour of Netflix costs 0.08 kWh. If you instead used that hour talking with an LLM like ChatGPT for no purpose other than entertainment, you could generate a reply every two minutes and still use less power than streaming. (At 0.8 kWh, you can message every 14 seconds!)
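Here is a rough Python sketch of that comparison. One number in it is an assumption that this section does not state: I use about 3 Wh per LLM reply, chosen because it is consistent with the intervals quoted above. Swap in your own estimate if you have a better one.

```python
REPLY_WH = 3.0  # ASSUMPTION: energy per LLM reply; not a figure stated in this section


def seconds_between_replies(streaming_kwh_per_hour: float, reply_wh: float = REPLY_WH) -> float:
    """How often you could request a reply while matching one hour of streaming."""
    replies_per_hour = streaming_kwh_per_hour * 1000 / reply_wh
    return 3600 / replies_per_hour


for streaming_kwh in (0.08, 0.8):
    gap = seconds_between_replies(streaming_kwh)
    print(f"Streaming at {streaming_kwh} kWh/hour matches one reply every {gap:.1f} seconds")
```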

Sitting down and doing nothing but generating Stable Diffusion images nonstop for an hour with a 200 W GPU takes the same 200 Wh that playing any video game on high settings would.

Any normal consumer AI use is going to be equivalent to these medium-energy entertainment tasks that we basically consider to be free. I’ve turned on a streaming service just to have something playing in the background, and probably so have you. AI just doesn’t use enough power to be more wasteful than just normal tech use.

Software

(Nicholas Carlini, “How I use “AI”” covers this topic in great depth, and I recommend it if this train of thought interests you.)

I was planning on writing about how the preparation/usage model was structurally similar to software development, with a clear software development stage followed by multiple usages, all of which consume energy. But that’s a challenging comparison to make, since there’s so much variance in the domain of “what software can do”. Worse, I’m not aware of any real data on the power requirements of enterprise software development, nor is it clear to me how much real work these “AI assist” tools do. In other words, quoting Wikimedia’s Chris Albon,

Sat Jul 20 15:00:12 +0000 2024: I have a hard time describing the real value of consumer AI because it’s less some grand thing around AI agents or anything and more AI saving humans a hour of work on some random task, millions of times a day.

I just experienced this the other day. I needed to quickly automate some clicking but I didn’t remember the exact JavaScript semantics and didn’t want to context-switch to reading the documentation, so I just asked

In the javascript development console, I have a NodeList returned from a query selector. Write a console snippet that calls .click() on each item in the list, but with a one-second delay between each call.

and got back exactly what I needed:

You can achieve this by using `setTimeout` within a loop to introduce a delay between each `.click()` call. Here’s a snippet that does that:

```javascript
const nodeList = document.querySelectorAll('your-selector'); // Replace with your actual selector

nodeList.forEach((node, index) => {
    setTimeout(() => {
        node.click();
    }, index * 1000); // 1000ms = 1 second
});
```

This code will call `.click()` on each item in the `NodeList` with a 1-second delay between each call. The `index * 1000` ensures that the delay increases for each subsequent item.

This saved me a few minutes and eliminated the overhead involved in thinking through a new development problem. And yes, it probably replaced 10 page clicks too.

But, for something larger and less incidental, I was reminded of a conversation I saw. The AI service Claude is, apparently, able to build simple software tools and user interfaces just from conversational description.

That’s really cool. But that’s also a much better basis for a cost/benefit comparison.

None of the tools in that conversation thread are particularly impressive by themselves. A good text editor already has a feature to diff text, good composition software already tells you your document’s character/word/paragraph count, and a good image editor has a color-picker as a basic feature. Still, it’s useful to be able to do any of these things quickly from a browser if you don’t have the relevant program open, as evidenced by the fact that many mini websites like this exist.

Let’s take the color picker example. With access to the internet, I could probably put together something serviceable in an hour. If Claude can spend power to do that work for me, based on what I’ve seen so far, I would expect whatever additional energy cost using the AI tool involved (if any!) to be made up for in the time and energy it saved me.

But the value of Claude here isn’t that it would save me an hour, it’s that it would enable someone without as much development experience to make a tool that might otherwise take them days.

That’s a significant factor, but not one that slots cleanly into our proportional cost calculation. That’s a benefit that’s hiding under “utility”.

Unfortunately, in the same way, there are also hidden costs. We also need to consider the impacts this energy use implies, and there are significant problems under that rug. Because there are energy problems with AI; they’re just not that every time you use ChatGPT they have to burn a barrel of coal. That, we can rule out.

That leads us into Part 2.


1. Like text recognition, or recognizing subject matter in pictures.

2. I’m not including the models that were designed to be trained on non-standard TPU hardware, since, as the table notes, the data isn’t available, and the real power draw for training the model may be considerably lower than the benchmarks on alternate hardware.

3. There are a lot of traps of over-generalization here. Performance is a significant reason why a model would run in a data center: if your local machine isn’t powerful enough to run the operation locally, that’s a reason to use data centers for cloud processing. But that’s not the only reason: secrecy and restriction are also reasons a company might force you to use a cloud version of their software, so that doesn’t necessarily indicate that the model requires more energy. And the “can run locally on your computer” category isn’t limited to traditional software; there are many AI models that fall into that category too, like Stable Diffusion.

4. The taxonomy of the different classes of data centers is more complex than this if you’re deep in the industry, but for the purpose of this essay I’m drawing the line at dedicated professional facilities, which would include both commercial colocation facilities and truly vertically-integrated hyperscale centers like AWS runs.
