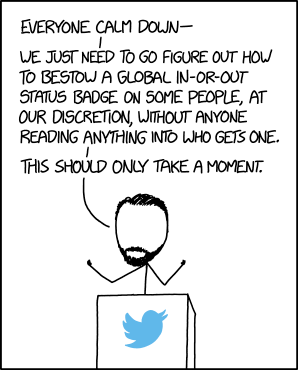

Recent tech trends have followed a pattern: huge, society-disrupting systems that people don’t actually want.

Worse, it then turns out there’s some reason they’re not just useless but actively harmful.

While planned obsolescence means this applies to consumer products in general, the recent major tech fad hypes — cryptocurrency, “the metaverse”, artificial intelligence… — all seem to be comically expensive boondoggles that only really benefit the salesmen.

Monorail!

The most recent tech-fad-and-why-it’s-bad pairing seems to be AI and its energy use.

This product-problem combo has hit the mainstream as an evocative illustration of waste, with headlines like “Google AI Uses Enough Electricity In 1 Second To Charge 7 Electric Cars” and “ChatGPT requires 15 times more energy than a traditional web search.”

It’s a narrative that’s very much in line with what a disillusioned tech consumer expects.

There is justified resentment boiling toward big tech companies right now, and AI seems to slot in as another step in the wrong direction.

The latest tech push isn’t just capital trying to control the world with a product people don’t want; it’s burning through the planet to do it.

But, when it comes to AI, is that actually the case?

What are the actual ramifications of the explosive growth of AI when it comes to power consumption?

How much more expensive is it to run an AI model than to use the next-best method?

Do we have the resources to switch to using AI on things we weren’t before, and is it responsible to use them for that?

Is it worth it?

These are really worthwhile questions, and I don’t think the answers are as easy as “it’s enough like the last thing that we might as well hate it too.”

There are proportional costs we have to weigh in order to make a well-grounded judgement, and after looking at them, I think the energy numbers are surprisingly good, compared to the discourse.

Is AI eating all the energy? Part 2/2
