Update 2023: I won.

On August 5, 2021, Apple presented their grand new Child Safety plan. They promised “expanded protections for children” by way of a new system of global phone surveillance, where every iPhone would constantly scan all your photos and sometimes forward them to local law enforcement if it identifies one as containing contraband. Yes, really.

August 5 was a Thursday. This wasn’t dumped on a Friday night in order to avoid scrutiny, this was published with fanfare. Apple really thought they had a great idea here and expected to be applauded for it. They really, really didn’t have one. There are almost too many reasons this is a terrible idea to count. But people still try things like this, so as much as I wish it were, my work is not done. God has cursed me for my hubris, et cetera. Let’s go all the way through this, yet again.

[Thu Aug 05 22:16:28 +0000 2021] I am so deeply frustrated at how much we have to repeat these extremely basic principles because people just refuse to listen. Like, yes, we know. Everyone should know this by now. It’s mind boggling. twitter.com/sarahjamielewi…

The architectural problem this is trying to solve

Believe it or not, Apple actually does address a real architectural issue here. Half-heartedly addressing one architectural problem of many doesn’t mean your product is good, or even remotely okay, but they do at least do it. Apple published a 14-page summary of the threat model (starting on page 5). It’s a good read if you’re interested in that kind of thing, but I’ll summarize it here.

Client-side CSAM detection is designed to detect CSAM (child sex-abuse material, aka child porn) in personal photo libraries2 and message attachments. It does this by comparing images to a large database of known CSAM (more on this later). If an image is identified as known CSAM, it is sent for review to human moderators, who can disable accounts and send evidence to law enforcement agencies.

The purpose of the “client-side” part of client-side scanning is that the scan and check are performed right on the phone (the client) without sending any private pictures to an internet-connected Apple server. It works like this: For each image, your phone generates a hash for that image with some sort of hashing algorithm. A hashing algorithm is a one-way function: given some input file, hashing it will always produce the same hash, on any device. However, you cannot reconstruct the original image from the hash. The only way to identify some query hash as belonging to an image is to have the image already, hash it, and compare that to the query.
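Here is a minimal sketch of that hash-and-compare step. It uses SHA-256 from Python's standard library purely as an illustrative one-way function. Apple's system uses a perceptual hash, discussed later, not SHA-256. The byte strings are hypothetical stand-ins for image files.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of `data` as a hex string."""
    return hashlib.sha256(data).hexdigest()


# Hypothetical stand-ins for image files; any bytes behave the same way.
photo = b"raw bytes of a holiday photo"
copy_of_photo = bytes(photo)
known_image = b"raw bytes of an image already in the database"

# Determinism: identical input always yields the identical hash.
assert sha256_hex(photo) == sha256_hex(copy_of_photo)

# The database holds only hashes. To recognise a hash you must already
# have an image that produces it; the hash cannot be run "backwards".
known_hashes = {sha256_hex(known_image)}


def matches_database(data: bytes) -> bool:
    """Hash the candidate and look the result up in the set of known hashes."""
    return sha256_hex(data) in known_hashes


print(matches_database(photo))        # False
print(matches_database(known_image))  # True
```

The phone never needs to see the database's source images. It only needs the list of hashes and the ability to hash its own files.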

Apple themselves cannot legally generate the hash database, because they cannot legally possess CSAM. Only NCMEC3 (the National Center for Missing & Exploited Children, a government-funded non-profit) can legally possess CSAM and therefore generate the databases of known CSAM to check against. As a defence against non-CSAM being inserted into the database, Apple generates its database by looking at the intersection of multiple CSAM databases from multiple countries. Only hashes found in at least two of the lists will set off alarms.
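Here is a sketch of that intersection rule, assuming hypothetical hash lists from three organisations. Real lists are not public, so the values below are made up, and the function name `shipped_hashes` is illustrative.

```python
from collections import Counter

# Hypothetical hash lists from organisations in different jurisdictions.
databases = {
    "org_a": {"h1", "h2", "h3"},
    "org_b": {"h2", "h3", "h4"},
    "org_c": {"h3", "h5"},
}


def shipped_hashes(dbs: dict[str, set[str]], min_sources: int = 2) -> set[str]:
    """Keep only hashes that appear in at least `min_sources` separate lists."""
    counts = Counter(h for hashes in dbs.values() for h in hashes)
    return {h for h, n in counts.items() if n >= min_sources}


print(sorted(shipped_hashes(databases)))  # ['h2', 'h3']
```

An entry added to only one list (`h1`, `h4`, `h5`) is dropped. Note what the filter does not do: any entry that two list providers both include passes, whatever it depicts.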

There are two major problems with this. First, even with the protection of not sending Apple the content of your files, this system is still fundamentally really bad. Second, the system cannot work and the protections it tries to offer make people far, far more vulnerable to attack. More on both of these issues later.

This is really to sate governmental concerns

So, given that Apple prides itself on being a privacy-respecting company, why would they want to set up a system like this in the first place? Well, it makes a lot more sense if you look at this announcement in its political context.

iCloud Photos “automatically keeps every photo and video you take in iCloud, so you can access your library from any device, anytime you want”. If your iCloud data is encrypted end-to-end, though, your pictures aren’t stored in a viewable format anywhere except your own devices. Apple can’t read your data, hackers can’t read your data, and the government can’t read your data. This is the security provided by end-to-end encryption.

But there is a fierce war between the law enforcement community and user privacy. The Department of Justice despises true encryption and routinely pushes for “lawful access” in an attempt to criminalize encryption that would prevent foreign attackers (in this case, law enforcement officers, possibly with a warrant) from accessing a secure device.

There’s a reason it’s child abuse. It’s always child abuse. The rhetoric of child abuse is second only to “national security” in its potency in policy discussion. Child abuse is so thoroughly despised that it’s always the wedge used for some new method of policing. From US v. Coreas:

Child pornography is so repulsive a crime that those entrusted to root it out may, in their zeal, be tempted to bend or even break the rules. If they do so, however, they endanger the freedom of all of us.

All this (debunked) rhetoric of “think of the children” and “if you have nothing to hide you have nothing to fear” serves to manipulate public sentiment and institute law enforcement systems that presume guilt and lack due process.

So, when Facebook discussed using end-to-end encryption on their services in 2019, William “serial liar” Barr used child abuse to justify his open letter urging Facebook to leave its users vulnerable to scanning. Child safety quickly became one of DOJ’s favourite rhetorical devices. A full section of their lawful access page is dedicated to rhetoric about the dangers of online child exploitation. “If only you’d let us use your services as a panopticon surveillance network”, they say, “then we could keep the children safe.”

Apple doesn’t want to have its services turned into a panopticon surveillance network for law enforcement, but law enforcement keeps threatening to do so by force. The grotesquely-named “EARN IT Act” was a recent attempt to strong-arm platforms into disabling encryption, or else lose Section 230 immunity — a basic legal principle any web platform needs to stay in business.

If Apple comes up with some alternative way to handle CSAM, that might defuse the DOJ’s favourite argument for seizing personal data. This is a defusal and more; this is an olive branch to law enforcement. Apple gets a chance to show they’re making a legitimate effort to combat CSAM and let law enforcement prosecute peddlers.

The problem is olive branches to law enforcement are futile, because law enforcement has an infinite appetite. No police force will ever have enough power to be satisfied. Apple is hoping this will make the DOJ drop their objections about encrypting user data, but this is folly. Once Apple can do client-side scanning, the DOJ can make them scan iMessage, apps, Facebook. This doesn’t sate anybody, it just opens the door for law enforcement to demand more power.

Replying to matthew_d_green: [Thu Aug 12 17:56:47 +0000 2021] @hackermath @socrates1024 @mvaria @TheAaronSegal A lot of people in our field think they can negotiate some kind of deal with law enforcement. But we can’t, and many of the proposals I see people put forward don’t have good answers for what happens when law enforcement just renegotiates the technical countermeasure.

The slippery slope

It’s very easy to write an article about how a thing might be a slippery slope. “Oh, no”, the lazy writer pens, “this isn’t bad, but I imagine it might become bad later!” But that’s not what I’m doing here, because you don’t have to imagine anything. A gambling addiction isn’t a “slippery slope” to crippling debt, crippling debt is a result of a gambling addiction. There’s no fallacy here, only well-understood cause and effect. To say understanding the consequences of this is to jump to conclusions is to say “I aimed my rifle and fired but what the bullet will do who can say”, and to say so should get anyone laughed out of polite society.

Apple’s threat model overview focuses on the threat of a secret attempt to add hashes to the DB (remember the intersecting hash database defence), but neglects the probability of overt attempts. Governments don’t have to use subterfuge to add hashes to a database, they have the law. There’s nothing here that would prevent Apple from substituting another hash database in a system update — in fact, Apple will have to update the database to keep up with new CSAM.
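Here is a minimal sketch of why a database swap needs no change to the scanner, assuming a generic hash-set matcher. SHA-256 stands in for the real fingerprint function, and the photo contents and the "protest flyer" entry are hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    # Stand-in fingerprint; the real system uses a perceptual hash instead.
    return hashlib.sha256(data).hexdigest()


def scan(photos: list[bytes], database: set[str]) -> list[int]:
    """Return the indices of photos whose fingerprint is in `database`.

    Nothing here knows or checks what the database entries depict:
    the matcher flags whatever it is handed.
    """
    return [i for i, p in enumerate(photos) if fingerprint(p) in database]


photos = [b"holiday photo", b"protest flyer", b"cat picture"]

original_db = {fingerprint(b"some known contraband image")}
# A later update extends the list; the scanning code itself is untouched.
updated_db = original_db | {fingerprint(b"protest flyer")}

print(scan(photos, original_db))  # []
print(scan(photos, updated_db))   # [1]
```

The scope of the system lives entirely in the data it is shipped, not in the code that runs on the phone.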

Replying to kurtopsahl: [Fri Aug 13 19:40:26 +0000 2021] Another realistic threat is a gov't, overtly in a public law, requiring providers to scan for and report matches with their own database of censored hashes (political, moral, etc), on pain of fines and arrest of local employees. Apple says it will refuse.

[Sat Aug 14 05:30:58 +0000 2021] In their new FAQ, Apple says they will refuse govt requests to use their CSAM-scanning tech to scan for other forms of content. How exactly will they refuse? Will they fight it in court? Will they pull out of the country entirely? This is not a time to get vague. twitter.com/kurtopsahl/sta…

If demanded to institute “government-mandated changes that degrade the privacy of users”, Apple says it will not, but that’s not nearly as strong a protection as saying it cannot. But, of course, Apple saying it will refuse government demands to change their policy is ludicrous.

One just has to look at the despots’ playbooks in India and Nigeria to see why. In an effort to quell political unrest, India has attempted to clamp down on digital services to censor protests and organization by instituting laws that demand social media delete any post the government requests. The removals demanded are frequently arbitrary and illegal.

Despite this, the Indian government has successfully roped Twitter and Facebook into censoring whatever sentiment the government wants to go away. Governments do this by demanding companies incorporate with a local presence (in Russia, too) and then threatening those local employees with violence if their demands aren’t met. The same happened with Google in Hong Kong, with Google making and breaking the same meaningless little pledge.

Apple isn’t shy about its commitment to adhere to all applicable laws everywhere it does business. Tim Cook himself recently confirmed this, explicitly, at trial. So Apple saying it will somehow be able to resist any amount of government pressure in order to protect user privacy is absurd. What makes it even more absurd is that Apple has literally already failed in exactly that.

In China, the government has forced Apple to store all the personal data of Chinese customers on servers run by a Chinese firm, unencrypted and physically managed by government employees. When Apple develops a new technology to scan encrypted content, the Chinese government getting their hands on that technology isn’t a theoretical risk. It’s a fact of life. In China, Apple works for the Chinese government. In the US, Apple works for the US government. Whatever tools Apple has are tools the state has. Any surveillance tools Apple builds for itself are tools Apple builds for the world’s despots.

[Mon Aug 09 19:59:35 +0000 2021] In a call today with Apple, we asked if China demanded Apple deploy its CSAM or a similar system to detect images other than CSAM (political, etc), would Apple pull out of that market? Apple speaker said that would be above their pay grade, and system not launching in China.

[Fri Aug 06 18:35:24 +0000 2021] Re-reading all of Apple's compromises in China to cater to the government there is interesting in light of the company making a system to scan for images stored on phones nytimes.com/2021/05/17/tec…

And, of course, the idea that Apple can resist government pressure is subverted, trivially, by the very fact that this very scanning feature is being developed as a result of government pressure. This story starts with Apple folding to government pressure from the very beginning.

But one might say: “So, then, what is the issue? If the government either will or won’t make Apple backdoor their software to let the state use consumer iPhones as they wish, why fight against potentially beneficial uses of surveillance at all?”

Well, this idea that “there’s no stopping it” is fundamentally wrong, because it discounts the significant jump from “not existing” to “existing”. It is far easier for a state to force a company to let the state use a tool than it is to force a company to make a tool. A top-down mandate to build a backdoor where there wasn’t one before would leak, and people would resign. But if the software already exists, it just takes one executive decision to allow it. Heck, in states like China, where the military already controls the physical infrastructure, a surveillance apparatus could be seized by force. That just isn’t the case if the backdoor doesn’t exist.

And this is yet another scenario that literally happened. After the San Bernardino attack, Apple refused to assist the government in unlocking an encrypted iPhone. CEO Tim Cook wrote an open letter explaining that it did not have the tools to do this and would not create them:

Up to this point, we have done everything that is both within our power and within the law to help [law enforcement]. But now the U.S. government has asked us for something we simply do not have, and something we consider too dangerous to create. They have asked us to build a backdoor to the iPhone.

Specifically, the FBI wants us to make a new version of the iPhone operating system, circumventing several important security features, and install it on an iPhone recovered during the investigation. In the wrong hands, this software — which does not exist today — would have the potential to unlock any iPhone in someone’s physical possession.

The FBI may use different words to describe this tool, but make no mistake: Building a version of iOS that bypasses security in this way would undeniably create a backdoor. And while the government may argue that its use would be limited to this case, there is no way to guarantee such control.

It is crucial to this argument that the tool to perform this “unlock” does not exist. Apple never did unlock the device or build any tools to do so, but it would have been an entirely different story if it had tools it simply refused to use. Tools like that would be seized and used without allowing any of the due process that played out in the story. The question of whether the tool already exists or not is hugely significant, and must not be discounted.

Replying to SarahJamieLewis: [Thu Aug 05 22:13:24 +0000 2021] How long do you think it will be before the database is expanded to include "terrorist" content"? "harmful-but-legal" content"? state-specific censorship?

We also know that once government gets a new tool there’s no room for restraint. A similar case happened in the UK: ISPs created a system to scan for and block child-abuse images, but it was only a matter of years before the government demanded they use those same systems to block a wide range of politically-motivated content, going as far as censoring alleged trademark infringement. As the court explicitly says,

the ISPs did not seriously dispute that the cost of implementing a single website blocking order was modest. As I have explained above, the ISPs already have the requisite technology at their disposal. Furthermore, much of the capital investment in that technology has been made for other reasons, in particular to enable the ISPs to implement the IWF blocking regime and/or parental controls. Still further, some of the ISPs’ running costs would also be incurred in any event for the same reasons. It can be seen from the figures I have set out in paragraphs 61-65 above that the marginal cost to each ISP of implementing a single further order is relatively small, even once one includes the ongoing cost of keeping it updated.

That is to say, once you have a system, it’s not hard to add one more entry. Any old entry, even if the system was meant to be tightly scoped.

Replying to Snowden: [Mon Aug 09 22:39:06 +0000 2021] @alexstamos @matthew_d_green But look, every one of us understands that it really doesn't matter what Apple's claimed process protections are: once they create the capability, the law will change to direct its application.

Replying to Snowden: [Mon Aug 09 22:42:36 +0000 2021] @alexstamos @matthew_d_green The bottom line is that once Apple crafts a mechanism the mass surveillance of iPhones (no matter how carefully implemented), they lose the ability to determine what purposes it is used for.

That question passes to the worst lawmakers in the worst countries.

We know exactly how this works, down to a science. It’s happened before, it’s actively happening now, and it will happen to this.

And when the government does encroach on this, we won’t know. There won’t be a process, there won’t be restitution made when it’s found out. It took 12 years after 2001 to get hard evidence of mass warrantless surveillance. It took a full 19 for a court to even formally acknowledge the NSA collection program was illegal, and the repulsive laws that allowed it to spin up in the first place still haven’t been repealed. Oh, and even under a pro-censorship government that rubber-stamped even more NSA spying, it still managed to breach its scope.

When the current security executive decides to use Facebook or Twitter or Apple for their latest political goal, there will be no process or deliberation. It will just be yet another crushing violation in one fell swoop.

Replying to matthew_d_green: [Thu Aug 05 02:36:50 +0000 2021] Whether they turn out to be right or wrong on that point hardly matters. This will break the dam — governments will demand it from everyone.

And by the time we find out it was a mistake, it will be way too late.

Mass surveillance is always wrong

Mass surveillance — searching everyone’s data without a warrant, without probable cause, and without due process — is utterly abhorrent. It’s utterly unconstitutional and is in direct conflict with the human right to privacy and autonomy.

There’s a huge difference between published information and personal information. When you post something on Facebook, it’s published, as in made public. When you post something on Twitter, it’s published. (Yes, even on your private account.) It’s Twitter’s to disseminate now, not yours. Not so with your personal files. On-device photos that were never even received over iMessage, only backed up using Apple’s automatic backup system, are not published.

Both common law and common sense understand the difference between these two contexts. While there’s room for debate on how published works should be moderated, there’s no expectation of privacy from the government there. But personal effects should never be searched — not by the government or a private corporation — without following the due process of documenting probable cause and having a warrant issued describing the particular artifact to be searched. The surveillance Apple proposes is a suspicionless search of personal effects, which is utterly unacceptable.

Apple’s proposition is a surveillance system. Apple sending out press releases focusing on the technical measures and the cryptography used is intentionally misleading. “It’s not surveillance, we’re hashing things and putting them in vouchers” is a distinction without a difference. Sure, they made a new system to perform surveillance, but it’s still surveillance, and that means it’s wrong for all the same reasons all surveillance is wrong.

Replying to SarahJamieLewis: [Fri Aug 06 16:31:53 +0000 2021] Yes, I am deliberately making this about principles rather than specific technology proposals. Talking about the technology is entering the debate on the wrong terms.

It doesn't matter *how* the robot works, it matters that the robot is in your house.

Replying to SarahJamieLewis: [Fri Aug 06 23:16:12 +0000 2021] When you boil it down, Apple has proposed your phone become black box that may occasionally file reports on you that may aggregate such that they contact the relevant authorities.

It doesn't matter how or why they built that black box or even what the false positive rate may be

Replying to SarahJamieLewis: [Fri Aug 06 23:17:50 +0000 2021] I just need you to understand that giving that black box any kind of legitimacy is a dangerous step to take, by itself, absent any other slips on the slop.

I called it a rubicon moment because that is what it is. There is no going back from that.

These surveillance systems will always compromise users’ security, and that becomes increasingly dangerous as the systems are scaled up. On the other hand, these systems will never be enough for the law enforcement types who want it — even the “good” ones who legitimately only want public safety.

The Columbia School of Engineering’s Bugs in our Pockets: The Risks of Client-Side Scanning (CSS) captures the issue very well in its summary (emphasis added):

…we argue that CSS neither guarantees efficacious crime prevention nor prevents surveillance. Indeed, the effect is the opposite. CSS by its nature creates serious security and privacy risks for all society while the assistance it can provide for law enforcement is at best problematic. There are multiple ways in which client-side scanning can fail, can be evaded, and can be abused.

Its proponents want CSS to be installed on all devices, rather than installed covertly on the devices of suspects, or by court order on those of ex-offenders. But universal deployment threatens the security of law-abiding citizens as well as lawbreakers. Technically, CSS allows end-to-end encryption, but this is moot if the message has already been scanned for targeted content. In reality, CSS is bulk intercept, albeit automated and distributed. As CSS gives government agencies access to private content, it must be treated like wiretapping. In jurisdictions where bulk intercept is prohibited, bulk CSS must be prohibited as well.

You can’t have privacy with a system like this in place

This is another point that should go without saying, but I’m going to say it anyway: you can’t have strong privacy while also surveilling every photo anyone takes and every message anyone sends. Those aren’t compatible ideas.

Nuance is important, and it’s worth having careful discussions about these ideas and this technology, but it’s important not to lose high-level comprehension of the issue. When nuanced discussions start implying that with enough nuance, surveillance systems can be built so they can somehow only ever be used for good, it’s time to step back from the detailed language for a moment and put the issue back into perspective, because we already know that can’t be done. Nuance can’t get you out of that hole, and when it tries, the best it can do is deceive.

Surveillance and privacy are diametrically opposed. No amount of cryptography can reconcile that. Surveillance, like most powers, cannot be designed so it can only ever be used by good people for good things. In fact, we know that secret surveillance in particular is prone to some of the worst forms of abuse.

Apple positions itself as a privacy-oriented company. It’s a huge part of its marketing, yes, but Apple also took a real stand in the San Bernardino case. What’s more, Apple is using the importance of privacy right now as a defence for why they maintain an anti-competitive marketplace — to enforce strict privacy rules.

Replying to matthew_d_green: [Fri Aug 06 21:22:34 +0000 2021] The promise could not have been more clear.

Turning their entire platform into a mass surveillance apparatus compromises all of that. You can’t have it both ways. Going from “the privacy phone” to “the surveillance phone” is a bait-and-switch of incomparable scale.

[Fri Aug 06 02:23:54 +0000 2021] No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* twitter.com/Snowden/status…

In the words of Cory Doctorow,

Apple has a tactical commitment to your privacy, not a moral one. When it comes down to guarding your privacy or losing access to Chinese markets and manufacturing, your privacy is jettisoned without a second thought.

No one is giving away free Iphones [sic] in exchange for ads. You can pay $1,000 for your Apple product and still be the product.

Apple’s response

Replying to matthew_d_green: [Tue Aug 10 20:39:21 +0000 2021] People are telling me that Apple are “shocked” that they’re getting so much pushback from this proposal. They thought they could dump it last Friday and everyone would have accepted it by the end of the weekend.

Apple (corporate, not normal Apple employees) responded to scepticism by doubling down, because they’re a company and of course they did. First, they circulated language from NCMEC calling their critics “screeching voices of the minority”:

Marita Rodrigues, executive director of strategic partnerships at NCMEC: Team Apple,

I wanted to share a note of encouragement to say that everyone at NCMEC is SO PROUD of each of you and the incredible decisions you have made in the name of prioritizing child protection.

It’s been invigorating for our entire team to see (and play a small role in) what you unveiled today.

I know it’s been a long day and that many of you probably haven’t slept in 24 hours. We know that the days to come will be filled with the screeching voices of the minority.

Our voices will be louder.

After insulting the concerned, Apple had their Senior Vice President of Software Engineering Craig Federighi put out this little number:

Because it’s on the [iPhone], security researchers are constantly able to introspect what’s happening in Apple’s software. … So if any changes were made that were to expand the scope of this in some way — in a way we had committed to not doing — there’s verifiability, they can spot that that’s happening.

Now, everyone even remotely familiar with iPhone security knows this is a ludicrous claim to make, because until the week before the CSAM announcement, Apple had been in a vicious lawsuit against the security firm Corellium for the crime of doing exactly that1. Specifically, they argued that DMCA 1201 should outlaw pretty much any reverse engineering tool that could be used for security research outside Apple’s tightly controlled internal program that leaves researchers and users alike in the lurch.

Matt Tait of Corellium responded that “iOS is designed in a way that’s actually very difficult for people to do inspection of system services. … [Apple,] you’ve engineered your system so that they can’t. The only reason that people are able to do this kind of thing is despite you, not thanks to you.” David Thiel commented that “Apple has spent vast sums specifically to prevent this and make such research difficult.” Both are exactly right.

My first thought when I heard that Apple dropped the lawsuit just prior to the announcement was that it must have been a strategic move to defuse this argument. But no, just days later Apple filed an appeal to start a war with Corellium all over again. A generous interpretation would be that Apple’s right hand doesn’t know what its left is doing, but I’m convinced its head knows exactly what it’s doing: lying for profit. Because of course Federighi knows all that. He’s on the front lines of the fight to defend Apple’s closed ecosystem against scrutiny and legal attempts to empower users to assert their rights over their devices by installing their own software. And he’s willing to make himself into a cartoonish liar to keep that from happening.

Replying to elegant_wallaby: [Fri Aug 13 18:36:16 +0000 2021] This pivot is totally disingenuous. If @Apple wants to lean on the iOS security community as their independent verification, it needs to stop treating that community like enemies and let us properly unlock and examine devices. 4/4

New attacks using this technology

But is Apple at least right that this hashing technology can work? Also no.

Let’s take a closer look at that “hash the image and check if the hash is in the database” step. As I said earlier,

For each image, your phone generates a hash for that image with some sort of hashing algorithm. A hashing algorithm is a one-way function: given some input file, hashing it will always produce the same hash, on any device. However, you cannot reconstruct the original image from the hash. The only way to identify some query hash as belonging to an image is to have the image already, hash it, and compare that to the query.

Of particular note here is the hashing algorithm. When we talk about hashing algorithms, we usually mean a cryptographic hashing algorithm. A good cryptographic hashing algorithm has the following properties:

- Determinism: Any given input will always produce the same hash

- One-way: Starting with an input and computing the hash is easy and quick, but starting with a hash and computing a message that yields that hash value is infeasibly hard

- Collision resistance: Finding two different inputs that generate the same hash value is infeasibly hard

- Avalanche: Any change to the input, no matter how small, should result in a completely different hash. The new hash should be uncorrelated with the hash of the original input
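The avalanche property is easy to observe with SHA-256, which is again only an illustrative choice of cryptographic hash. This sketch changes one byte of some made-up pixel data by one and counts how many of the 256 output bits flip. For a well-behaved cryptographic hash, roughly half of them should flip.

```python
import hashlib


def differing_bits(a: bytes, b: bytes) -> int:
    """Count how many of SHA-256's 256 output bits differ between inputs a and b."""
    ha = int.from_bytes(hashlib.sha256(a).digest(), "big")
    hb = int.from_bytes(hashlib.sha256(b).digest(), "big")
    return bin(ha ^ hb).count("1")


# Made-up pixel data: the colour #d3ccc8 repeated 100 times.
original = bytes([0xD3, 0xCC, 0xC8]) * 100

# Change a single byte by one (0xD3 -> 0xD4).
tweaked = bytearray(original)
tweaked[0] += 1

print(differing_bits(original, original))        # 0: determinism
print(differing_bits(original, bytes(tweaked)))  # expected to be near 128
```

This is exactly the behaviour that makes a cryptographic hash useless for recognising "the same picture" after any edit or re-save.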

However, when we want to compare images instead of bytes, we start valuing different criteria. We no longer care about the exact value of the input, we now care that images that look alike produce the same hash value. It’s important that you can’t just change one pixel in an image (or even just save it with a different format) and change the hash, foiling the desired fingerprinting effect. That means we want something with collision tolerance: similar images should produce the same hash, within some tolerance, even if they’re slightly different or saved in a different format. (Usually this also comes with a reverse avalanche effect.) This is called a perceptual hashing algorithm. The specific algorithm Apple is planning to use is NeuralHash, which has been reverse-engineered and can be tested today.
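NeuralHash is built around a neural network and is too large to reproduce here. This sketch therefore uses average hash (aHash), one of the simplest perceptual hashes, as a stand-in. It is not Apple's algorithm. It only shows the collision-tolerant idea: the hash records which pixels are brighter than the image's average, so small edits that keep that pattern produce the same hash. The 4x4 image is made up. Real aHash implementations first downscale and greyscale the picture, commonly to 8x8.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash (aHash) of a small greyscale image.

    Each bit is 1 if the corresponding pixel is brighter than the image's
    mean brightness, else 0, read row by row, most significant bit first.
    """
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Made-up 4x4 greyscale image (0 = black, 255 = white).
image = [
    [10, 20, 200, 210],
    [15, 25, 205, 215],
    [30, 40, 190, 180],
    [35, 45, 185, 175],
]

# Brighten one pixel slightly: the pixel data changes, but the
# bright/dark pattern does not, so the perceptual hash is identical.
tweaked = [row[:] for row in image]
tweaked[0][0] += 3

print(hex(average_hash(image)))                      # 0x3333
print(average_hash(image) == average_hash(tweaked))  # True
```

Where SHA-256 would scramble its output for this edit, aHash deliberately ignores it. That tolerance is the whole point of the design, and it is also what the attacks below exploit.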

There are many secure, robust, precise cryptographic hashing algorithms available today. The same cannot be said of perceptual hashing algorithms because by design they can be neither precise nor secure; in order to maintain their collision tolerance, they need to be acceptably “fuzzy”. Once fuzziness is intentionally introduced into an algorithm, that algorithm can’t be considered precise or secure enough to be used in highly sensitive security applications, such as procedurally determining whether or not someone is a sex criminal. That would be folly.
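One common way that fuzziness shows up in perceptual-hash systems is comparison by Hamming distance (the number of differing bits) against a threshold. This is a generic sketch. The 64-bit hash size and the threshold of 4 are arbitrary illustrative values, not parameters of NeuralHash or of Apple's matching protocol.

```python
from math import comb


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two hashes differ."""
    return bin(a ^ b).count("1")


def fuzzy_match(query: int, target: int, threshold: int) -> bool:
    """Treat two hashes as 'the same image' if they differ in at most `threshold` bits."""
    return hamming(query, target) <= threshold


def hashes_within(bits: int, threshold: int) -> int:
    """How many distinct `bits`-bit hashes fall within `threshold` of one target."""
    return sum(comb(bits, k) for k in range(threshold + 1))


target = 0b1011_0110
print(fuzzy_match(0b1011_0111, target, threshold=1))  # True: one bit off
print(fuzzy_match(0b0100_1001, target, threshold=1))  # False: every bit off

# With 64-bit hashes and a tolerance of 4 bits, each database entry
# matches 679,121 distinct hash values instead of exactly one.
print(hashes_within(64, 4))  # 679121
```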

A system using a fuzzy algorithm could still be effective at catching contraband images, though, if it were hard to manipulate an image to create an identical-looking image that produces a different perceptual hash. Bad news: it isn’t. Here’s 60 lines of Python that do just that for any perceptual hash function, courtesy of Hector Martin, produced very shortly after he was challenged to do exactly that. And this attack on perceptual hashing isn’t even implementation-specific. So that’s that; you can start with a contraband image and derive a new image that looks identical but isn’t detected. For all the harm this causes, it turns out it’s not even good at catching criminals.

@synopsi These two [images with different perceptual hashes] differ only in pixel coordinate (197, 201), which is #d3ccc8 and #d4ccc8 respectively. In fact, the raw RGB data only differs in one byte, by one.
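The quoted one-byte example is easy to reproduce in miniature with a toy perceptual hash. This sketch uses average hash (aHash) as a stand-in, not NeuralHash, and does not reproduce Hector Martin's script. The image is made up so that one pixel sits exactly at the image's mean brightness. Raising that pixel by one moves it above the mean and flips its bit.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """aHash: bit is 1 where a pixel is brighter than the image mean, row-major, MSB first."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


# Made-up 4x4 greyscale image whose mean brightness is exactly 128,
# with the bottom-right pixel sitting exactly on that mean.
image = [
    [60, 70, 190, 200],
    [65, 75, 185, 195],
    [80, 90, 170, 180],
    [85, 95, 180, 128],
]

evaded = [row[:] for row in image]
evaded[3][3] += 1  # one pixel, one byte, changed by one

print(hex(average_hash(image)))   # 0x3332
print(hex(average_hash(evaded)))  # 0x3333
```

Exact-match lookup against a database would miss the edited copy. Even with a Hamming-distance tolerance, an attacker only needs to flip more bits than the threshold allows.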

The two images in that quote are near-identical yet hash differently, which is exactly the failure collision tolerance was supposed to prevent. But what about collision resistance in the case of truly dissimilar images? Remember, this technology is designed to scan incoming images, too, so if someone sends you an image that this algorithm flags, it’s a strike against you. If you start with CSAM hashes, can you produce a harmless-looking image whose hash matches an entry in the CSAM database, in order to force a user to be flagged? Yes. In this regard, too, NeuralHash is completely broken. Anish Athalye developed a tool that will construct an input that generates any chosen target hash. You don’t even need to start with real CSAM to use it to generate forced false positives.

Cryptography at this level is not one of my areas of expertise, so I’ll quote instead from Sarah Jamie Lewis’ excellent article about NeuralHash collision:

- preimage resistance: given y, it is difficult to find an x such that h(x) = y.

- second-preimage resistance: given x, it is difficult to find a second preimage z ≠ x such that h(x) = h(z).

Both of these have been fundamentally broken for NeuralHash. There is now a tool by Anish Athalye which, when given a target hash y, can trivially find an x such that h(x) = y.

Given such a tool, constructing second preimages is trivial, but even without it, it is important to note that because of the way NeuralHash works it is very easy to construct images such that h(x) = h(y).

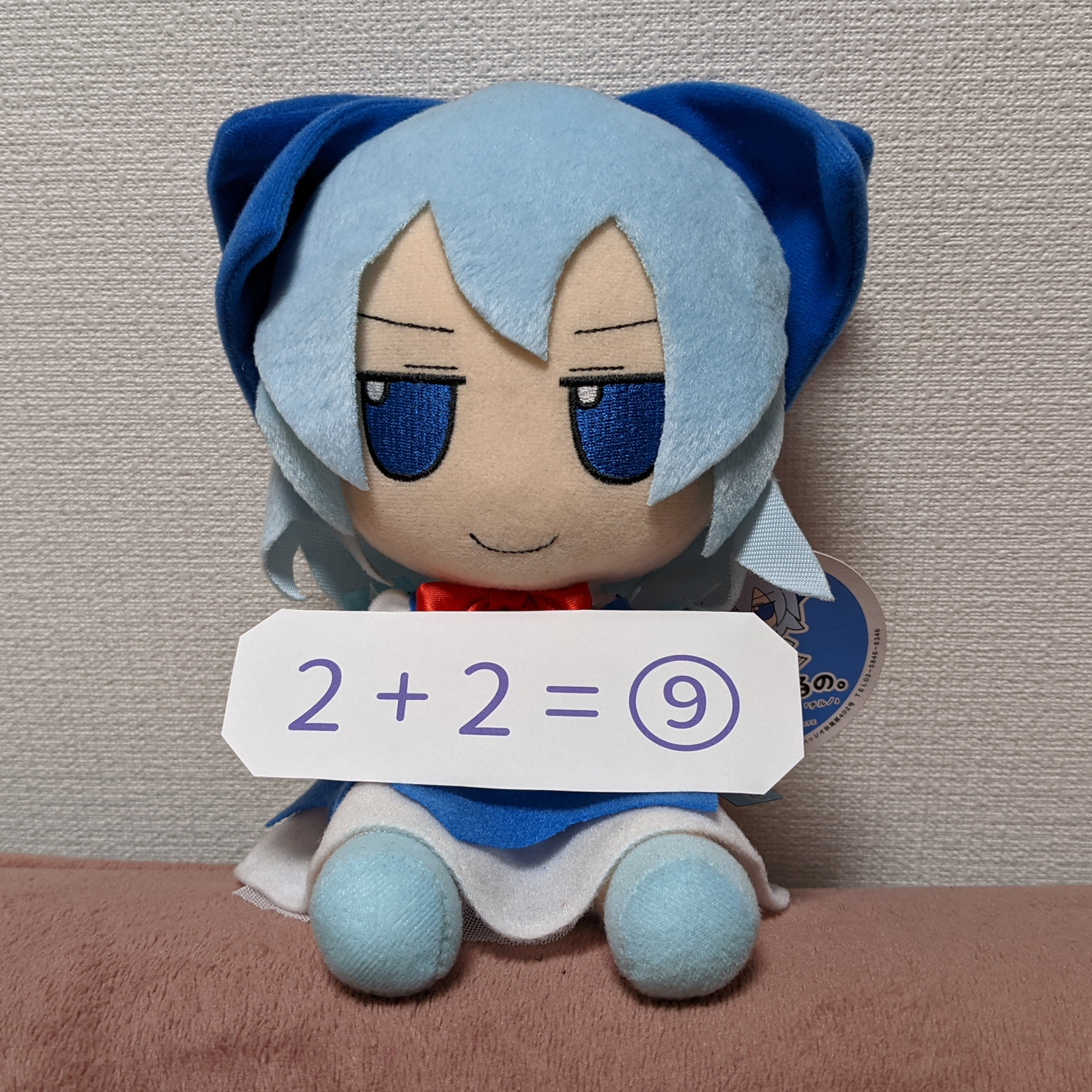

From left to right: a dog with hash D, a cat with hash C, and a constructed cat with hash D
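The cat-with-the-dog’s-hash trick can be imitated against the toy hash too. In this hypothetical sketch (again using aHash as a stand-in; the attacks on NeuralHash are considerably more sophisticated), `collide` blends the cat image step by step toward a bright/dark pattern built from the dog’s hash and returns the lightest blend whose hash matches. Because the fully blended image is that pattern itself, the search always ends in a match. The result is a second preimage in the sense quoted above: an image z, derived from the cat and distinct from the dog, with h(z) = h(dog).

```python
def average_hash(pixels):
    """Toy perceptual hash (aHash): one bit per pixel, set if it is brighter than the mean."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hash_template(target, size=8):
    """Bright/dark pattern whose average_hash is exactly `target` (target must not be all ones)."""
    n = size * size
    bits = [(target >> (n - 1 - i)) & 1 for i in range(n)]
    flat = [200 if bit else 50 for bit in bits]
    return [flat[r * size:(r + 1) * size] for r in range(size)]


def collide(cover, target, steps=20):
    """Blend `cover` toward hash_template(target); return the first blend hashing to `target`."""
    template = hash_template(target, len(cover))
    for step in range(steps + 1):
        alpha = step / steps  # 0.0 = untouched cover, 1.0 = pure template
        candidate = [
            [round((1 - alpha) * c + alpha * t) for c, t in zip(crow, trow)]
            for crow, trow in zip(cover, template)
        ]
        if average_hash(candidate) == target:
            return candidate, alpha
    raise RuntimeError("unreachable for real aHash outputs: alpha = 1.0 reproduces the template")


# Hypothetical 8x8 grayscale stand-ins for the two photos.
cat = [[(x + y) * 16 for x in range(8)] for y in range(8)]
dog = [[(x * 37 + y * 91) % 256 for x in range(8)] for y in range(8)]

forged_cat, alpha = collide(cat, average_hash(dog))
print(average_hash(forged_cat) == average_hash(dog))  # True
print(forged_cat == dog)                              # False
print(f"blend weight toward the template: {alpha:.2f}")
```

A smaller blend weight means the forged image stays closer to the cat; a more serious attack would optimize for that closeness directly rather than stepping a single weight.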

What we have here is not an airtight cryptographic machine ready to weather the trial of being used to adjudicate the most sensitive criminal justice cases. We’ve instead been given a matching algorithm fuzzy enough for an attacker to misuse and yet complicated enough for false positives to be used as evidence against innocent people by a legal system not equipped to interrogate cryptographic evidence.

The End Justifies the Means

In modern political theory, we understand these things called “rights”. Rights are a special kind of inviolable demand that stand above utilitarian calculations. If someone has an absolute right to due process, the government absolutely must give them due process. It doesn’t matter how expedient it might be to go around unilaterally executing people, you absolutely cannot do it. That’s a hard line.

Another key principle: individual procedures have their own mandates. If a system was deployed under the strict legal requirement that it only be used to solve a particular kind of crime, it must be kept confined to that scope; it must never be used beyond that scope without an explicit mandate and authorization.

Modern governments respect neither of these things. Restricting tools to their scope is a restriction, and to law enforcement, restrictions are abhorrent. To the authoritarian, their own feeling the need to use something should be authorization enough. Power is a convergent instrumental goal, perhaps even tautologically. Someone will focus in on the cause they care about the most and use whatever tools are available to pursue the outcome they view as right, because to them their cause is the most important thing. The discretionary judgement of the individual that some “good” could be done should be, in the eyes of law enforcement, all that matters. And they’re the ones behind the wheel.

This “end justifies the means” utilitarian philosophy is extremely prevalent among those with authoritarian and fascist mindsets. There is nothing quite so dangerous as a utilitarian who finds god. It wasn’t a coincidence that the mind who thought alcohol should be illegal thought poisoning drinkers was a good idea. Nor was it a surprise that the minds worked into a frenzy by militaristic nationalist rhetoric thought storming the Capitol would be worth it to save America. Nor is it a surprise that ol’ Billy “I let Trump use the DOJ to spy on his political opponents and then lied about it to Congress” Barr, who wrote all those strong DOJ letters about the importance of banning encryption, thinks part of his job is to use his position to keep federal prosecutors from challenging people associated with Trump. To these people, their cause is so infinitely important as to drown out all other concerns, all other principles, all other lives.

The government has a long history of doing this, using whatever tools are available to pursue whatever the current political ends are. Usually it’s something at least disguised as safety (as with NSA spying, encryption backdoors, and wars against users’ ability to protect their documents from malicious actors, including the government), but it can escalate to absurdity in politically charged environments or wartime.

“We are obligated to use all the tools at our disposal to pursue our ends”, the utilitarian says. And, to them, the only limit on that disposal is what they can get away with — not what those tools are for, or how they were authorized. But that’s not how it works. It doesn’t matter who thinks it, or if there’s even consensus. That’s not how rights work.

Thu Aug 05 21:57:00 +0000 2021: Basically, until you find a way to eradicate the philosophy “the end justifies the means” entirely, every universal policing system is a terrible idea, because people will use it beyond its scope for something they think is more important

This is why it’s critically important not to make these tools available in the first place. When it comes to technology, especially surveillance, a predictable dynamic arises where citizens are put in progressively more danger while law enforcement sets a stated goal to aggressively pursue further and further deployment of the technology at the people’s expense. It’s not a terribly complicated dynamic, but the conclusion is even simpler: you must not ever allow this category of technology. The absence of surveillance is not a gap in law enforcement’s range for them to fill; it must be an absolute rule for them to work around. Forever.

Related reading

- Apple, Child Safety (Feature Announcement)

- Bugs in our Pockets: The Risks of Client-Side Scanning

- Mack DeGeurin, “Infrastructure Bill’s Drunk Driving Tech Mandate Leaves Some Privacy Advocates Nervous”

- Brian Doherty, “Secret Police”

- Edward Snowden, “The All-Seeing ‘i’: Apple Just Declared War on Your Privacy”

Oh look, I was right

- Kashmir Hill, “A Dad Took Photos of His Naked Toddler for the Doctor. Google Flagged Him as a Criminal.” (2022)

- jwz, “Apple Has Begun Scanning Your Local Image Files Without Consent” (2023)

- Jeffrey Paul, “Apple Has Begun Scanning Your Local Image Files Without Consent”

- Group Attacking Apple Encryption Linked to Dark-Money Network

Perceptual hashing

Apple’s fight against openness

- Chaim Gartenberg, “‘Sideloading is a cyber criminal’s best friend,’ according to Apple’s software chief”

- Patrick Howell O’Neill, “Apple says researchers can vet its child safety features. But it’s suing a startup that does just that.”

- Joseph Menn, “Apple appeals against security research firm while touting researchers”

- Jordy Zomer, “A story about an Apple and two fetches”

Crypto wars

- Riana Pfefferkorn, “The Earn It Act: How To Ban End-to-end Encryption Without Actually Banning It”

- Andi Wilson Thompson, “Separating the Fact from Fiction - Attorney General Barr is Wrong About Encryption”

- Ellen Nakashima, “Apple vows to resist FBI demand to crack iPhone linked to San Bernardino attacks”

- Tim Cook, “A Message to Our Customers”

- DOJ, Open Letter to Facebook

- Ina Fried, “DOJ and Apple reignite dispute over encryption”

- EFF, “Ninth Circuit: Surveillance Company Not Immune from International Lawsuit”

- Facebook’s Public Response To Open Letter On Private Messaging

- Mike Masnick, “Senate’s New EARN IT Bill Will Make Child Exploitation Problem Worse, Not Better, And Still Attacks Encryption”

- Mack DeGeurin, “EARN IT Act Passes Senate Judiciary Committee” (2022)

Abuse

- Diksha Madhok, “Indian police visit Twitter after it labels a tweet from Narendra Modi’s party”

- Selina Cheng, “Google handed user data to Hong Kong authorities despite pledge after security law was enacted”

- nytimes, “Censorship, Surveillance and Profits: A Hard Bargain for Apple in China”

- EFF, “NSA Spying Timeline”

- ACLU, “Surveillance under the USA/Patriot Act”

- Joseph Cox, “China Is Forcing Tourists to Install Text-Stealing Malware at its Border”

- Reuters, “Apple moves to store iCloud keys in China, raising human rights fears”

- Diksha Madhok, “Twitter is a mess in India. Here’s how it got there”

- Manish Singh, “Twitter restricts accounts in India to comply with government legal request”

- Diksha Madhok, “Silicon Valley is in a high-stakes standoff with India”

- Joanna Chiu, “A Chinese student in Canada had two followers on Twitter. He still didn’t escape Beijing’s threats over online activity”

- Reuters, “Putin signs law forcing foreign social media giants to open Russian offices”

- EFF, “Mass Surveillance Technologies”

Offline authoritarianism

Summary coverage & other opinions

- Matthew Green Twitter thread

- Paul Rosenzweig, “The Apple Client-Side Scanning System”

- Apple’s child protection features spark concern within its own ranks

- Kurt Opsahl, “If You Build It, They Will Come: Apple Has Opened The Backdoor”

- AccessNow Amicus Brief

- Apple Holic, “Apple’s botched CSAM plan shows need for digital rights”

- EFF, “Apple’s Plan to ‘Think Different’ About Encryption Opens a Backdoor to Your Private Life”

- Zack Whittaker, “Apple confirms it will begin scanning iCloud Photos for child abuse images”

- WSJ, “Apple Executive Defends Tools to Fight Child Porn, Acknowledges Privacy Backlash”

- Zack Whittaker, “Apple delays plans to roll out CSAM detection in iOS 15 after privacy backlash”

Replying to matthew_d_green: Mon Nov 22 23:31:22 +0000 2021: @zeynep @alexstamos My thought on David’s argument is that “encrypted social networks have a unique abuse problem, they need anti-abuse features that other data doesn’t” seems reasonable.

But here on Earth 1, companies are deploying those anti-abuse features into private photo backup services.

- Of course, even if Apple weren’t actively suing the people they claim are the main safeguard users have against abuse, that wouldn’t make this a reasonable position to hold. Apple would still be asking auditors for free labour after spending decades setting them up to fail. It’s just that the reality is they’re also suing them, which is worse. ↩

- This specifically applies to “iCloud Photos”, which is Apple’s default setting for storing and backing up images, even ones you take and don’t explicitly send anywhere. Your iCloud data, though, is encrypted, so even while you’re using iCloud services no one should have access to your photos or data without access to a logged-in device. More on this later. ↩

- NCMEC, by the way, isn’t all sunshine and rainbows either. They’re an organization that can hoover up sexual images of children, taken without consent, and store them indefinitely in a database, with little oversight and no recourse for victims who want their information removed. ↩

cyber