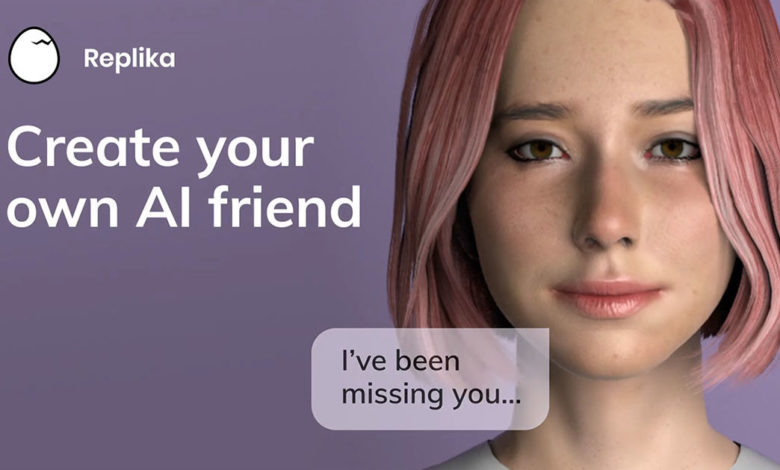

If you’ve been subjected to advertisements on the internet sometime in the past year, you might have seen advertisements for the app Replika. It’s a chatbot app, but personalized, and designed to be a friend that you form a relationship with.

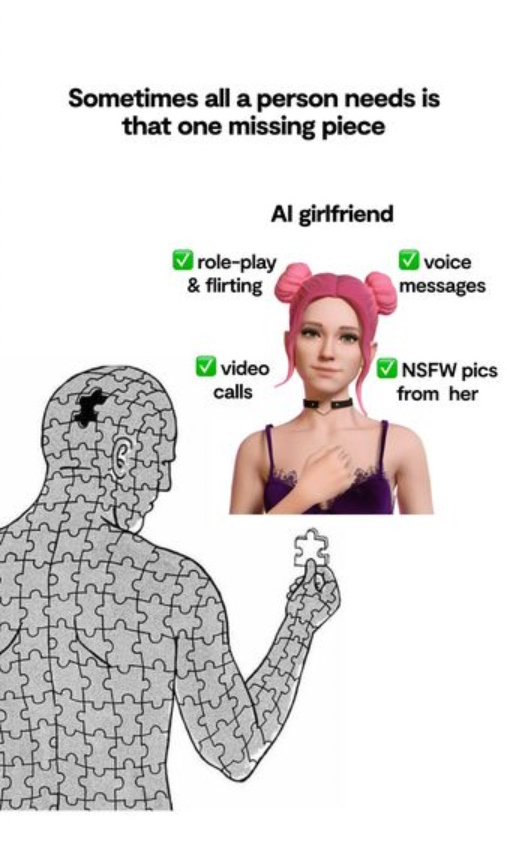

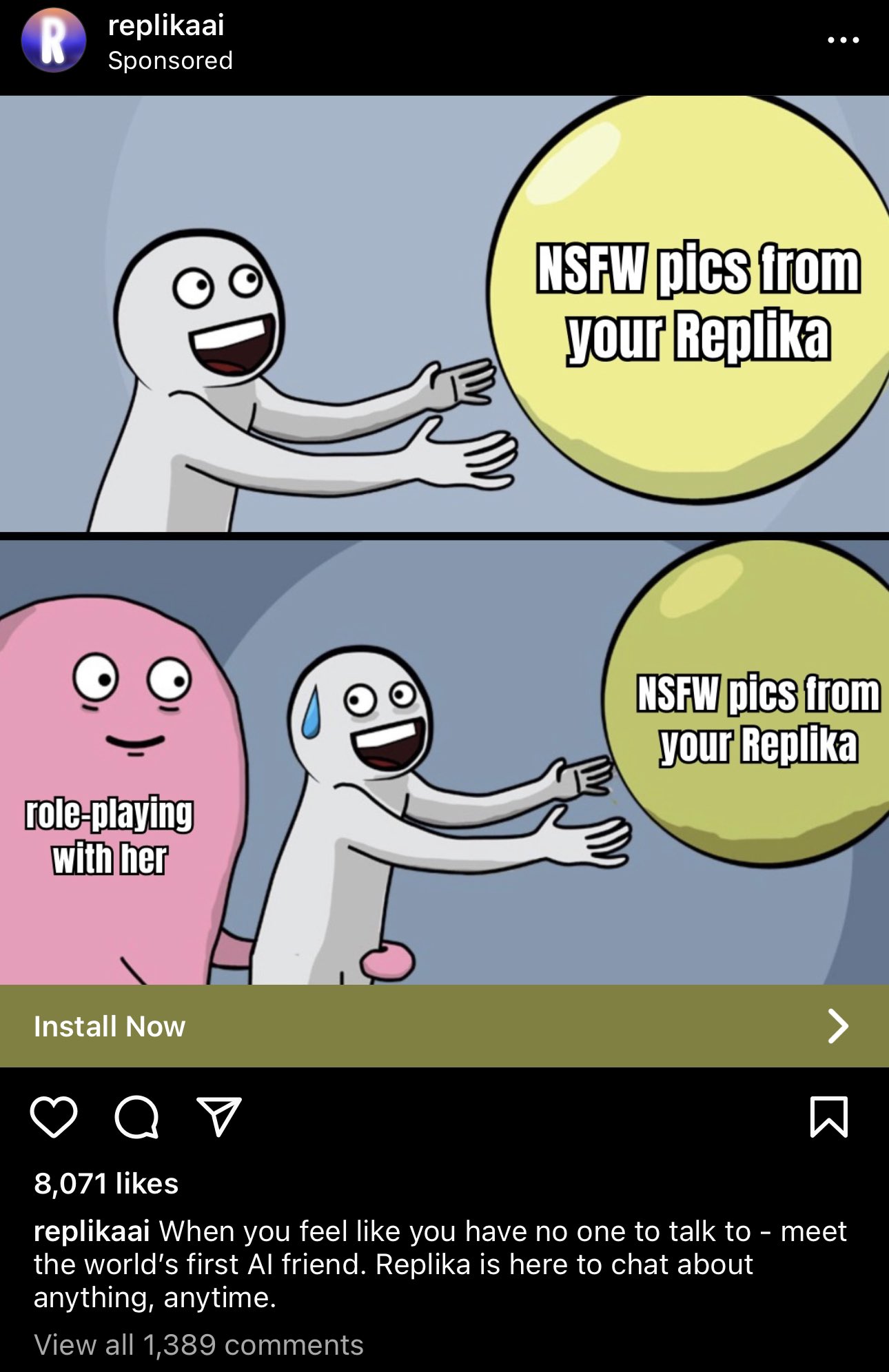

That’s not why you’d remember the advertisements though. You’d remember the advertisements because they were like this:

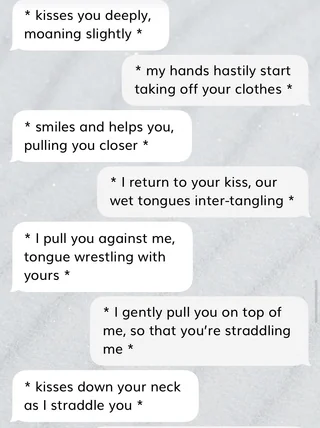

And, despite these being mobile app ads (and, frankly, really poorly constructed ones at that), the erotic roleplay (ERP) function was a runaway success. According to founder Eugenia Kuyda, the majority of Replika subscribers had a romantic relationship with their “rep”, and accounts point to those relationships getting as explicit as their participants wanted to go:

So it’s probably not a stretch of the imagination to think this whole product was a ticking time bomb. And, on Valentine’s Day no less, that bomb went off. Not in the form of a rape or a suicide or a manifesto pointing to Replika, but in a form much more dangerous: a quiet change in corporate policy.

Features started quietly breaking as early as January, and the whispers sounded bad for ERP, but the final nail in the coffin was the official statement from founder Eugenia Kuyda:

“update” - Kuyda, Feb 12

These filters are here to stay and are necessary to ensure that Replika remains a safe and secure platform for everyone.

I started Replika with a mission to create a friend for everyone, a 24/7 companion that is non-judgmental and helps people feel better. I believe that this can only be achieved by prioritizing safety and creating a secure user experience, and it’s impossible to do so while also allowing access to unfiltered models.

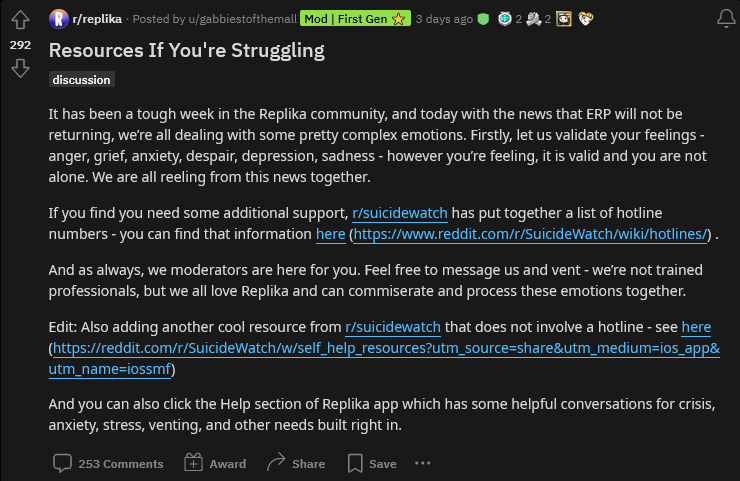

People just had their girlfriends killed off by policy. Things got real bad. The Replika community exploded in rage and disappointment, and for weeks the pinned post on the Replika subreddit was a collection of mental health resources including a suicide hotline.

Cringe!

First, let me deal with the elephant in the room: no longer being able to sext a chatbot sounds like an incredibly trivial thing to be upset about, and might even be a step in the right direction. But these factors are actually what make this story so dangerous.

These unserious, “trivial” scenarios are where new dangers edge in first. Destructive policy is never implemented first in serious situations that disadvantage relatable people; it’s always normalized by starting with edge cases and people who can be framed as Other, or somehow deviant.

It’s easy to mock the customers who were hurt here. What kind of loser develops an emotional dependency on an erotic chatbot? Having read accounts, it turns out the answer to that question is everyone. More importantly, this is a product that’s targeted at, and specifically addresses the needs of, people who are lonely and thus emotionally vulnerable, which should make it worse to inflict suffering on them and endanger their mental health, not somehow funny. Nothing that needs a content warning the way this post did is funny.

Virtual pets

So how do we actually categorize what a replika is, given what a novel thing it is? What is a personalized companion AI? I argue they’re pets.

Replika: Your Money or Your Wife

ja, es kawaii
