My friend Floober brought up, in chat, some recent changes VRChat is making, and I thought I’d jot down my thoughts.

The problem with the VRC economy is the same problem as with most “platform economies”: everyone is buying lots in a company town.

The Store

This was the precipitating announcement: VRChat releasing a beta for an in-game real-money store.

Paid Subscriptions: Now in Open Beta! (VRChat)

Over the last few years, we’ve talked about introducing something we’ve called the “Creator Economy,” and we’re finally ready to reveal what the first step of that effort is going to look like: Paid Subscriptions!

As it stands now, creators within VRChat have to jump through a series of complicated, frustrating hoops if they want to make money from their creations. For creators, this means having to set up a veritable Rube Goldberg machine, often requiring multiple external platforms and a lot of jank. For supporters, it means having to sign up for those same platforms… and then hope that the creator you’re trying to support set everything up correctly.

(The problem, of course, is that “frustrating jank” was designed by VRChat, and their “solution” is rentiering.)

Currently, the only things to purchase are nebulous “subscriptions” that map to different world or avatar features depending on the content. More importantly, though, this creates a virtual in-game currency and opens the door to future transaction opportunities. I’m especially thinking of something like an avatar store.

I quit playing VRChat two years ago, when they started to crack down on client-side modifications (which are good) by force-installing malware (which is bad) on players’ computers. Since then I’ve had a draft sitting somewhere about software architecture in general: how to evaluate whether a given architecture is safe or a trap, and how, just by looking at the way VRChat is designed, you can tell it’s a trap they’re trying to spring on people.

The Store of Tomorrow

Currently, the VRC Creator Economy is just a currency store and a developer API. Before this, there was no way for mapmakers to “charge users” for individual features: code is sandboxed, and you only know what VRC tells you, so you can’t just check against Patreon from within the game.¹

But the real jackpot for VRC is an avatar store. Currently, the real VRC economy works by creators building avatars, maps, and other assets in the (mostly-)interchangeable Unity format and then selling them to people. Most commonly this means selling avatars, avatar templates, or custom commissioned avatars. Users buy these assets peer-to-peer.

This is the crucial point: individuals cannot get any content in the game without going through VRC. When you play VRChat, all content is streamed from VRChat’s servers anonymously by the proprietary client. There are no URLs, no files, no addressable content of any kind. (In fact, in the edge cases where avatars are stored as discrete files, in the cache, users get angry about theft!) VRChat isn’t a layer over an open protocol; it’s its own closed system. Even with platforms like Twitter, at least there are files somewhere. But VRChat structurally attacks the entire concept of files. The user knows nothing and trusts the server, end of story.
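To make the “addressable content” distinction concrete, here is a toy sketch. It is not VRChat’s actual design, and every class and method name in it is hypothetical. It uses content hashing as one example of addressability: in the open model, an asset’s address is derived from its bytes, so anyone holding the file can verify and re-host it. In the closed model, assets are opaque IDs, and the server alone decides who receives the bytes.

```python
import hashlib


def content_address(data: bytes) -> str:
    # Anyone holding the bytes can compute the same address independently.
    return "sha256:" + hashlib.sha256(data).hexdigest()


class OpenStore:
    """Hypothetical open model: assets are files addressed by their content."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        addr = content_address(data)
        self._blobs[addr] = data
        return addr

    def get(self, addr: str) -> bytes:
        data = self._blobs[addr]
        # The client verifies what it received without trusting the host.
        if content_address(data) != addr:
            raise ValueError("content does not match its address")
        return data


class ClosedPlatform:
    """Hypothetical closed model: opaque IDs, and the server gates every fetch."""

    def __init__(self) -> None:
        self._assets: dict[str, bytes] = {}
        self._entitlements: set[tuple[str, str]] = set()

    def upload(self, asset_id: str, data: bytes) -> None:
        self._assets[asset_id] = data

    def grant(self, user: str, asset_id: str) -> None:
        self._entitlements.add((user, asset_id))

    def stream(self, user: str, asset_id: str) -> bytes:
        # The client has no address it could use elsewhere; it only gets
        # bytes if the server says this user is entitled to them.
        if (user, asset_id) not in self._entitlements:
            raise PermissionError(f"{user} is not entitled to {asset_id}")
        return self._assets[asset_id]
```

In the open model, `put` hands back an address that works anywhere the bytes exist. In the closed model, `stream` is the only way in, which is exactly the position that makes a platform-run store the natural next step.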

Notes on the VRC Creator Economy


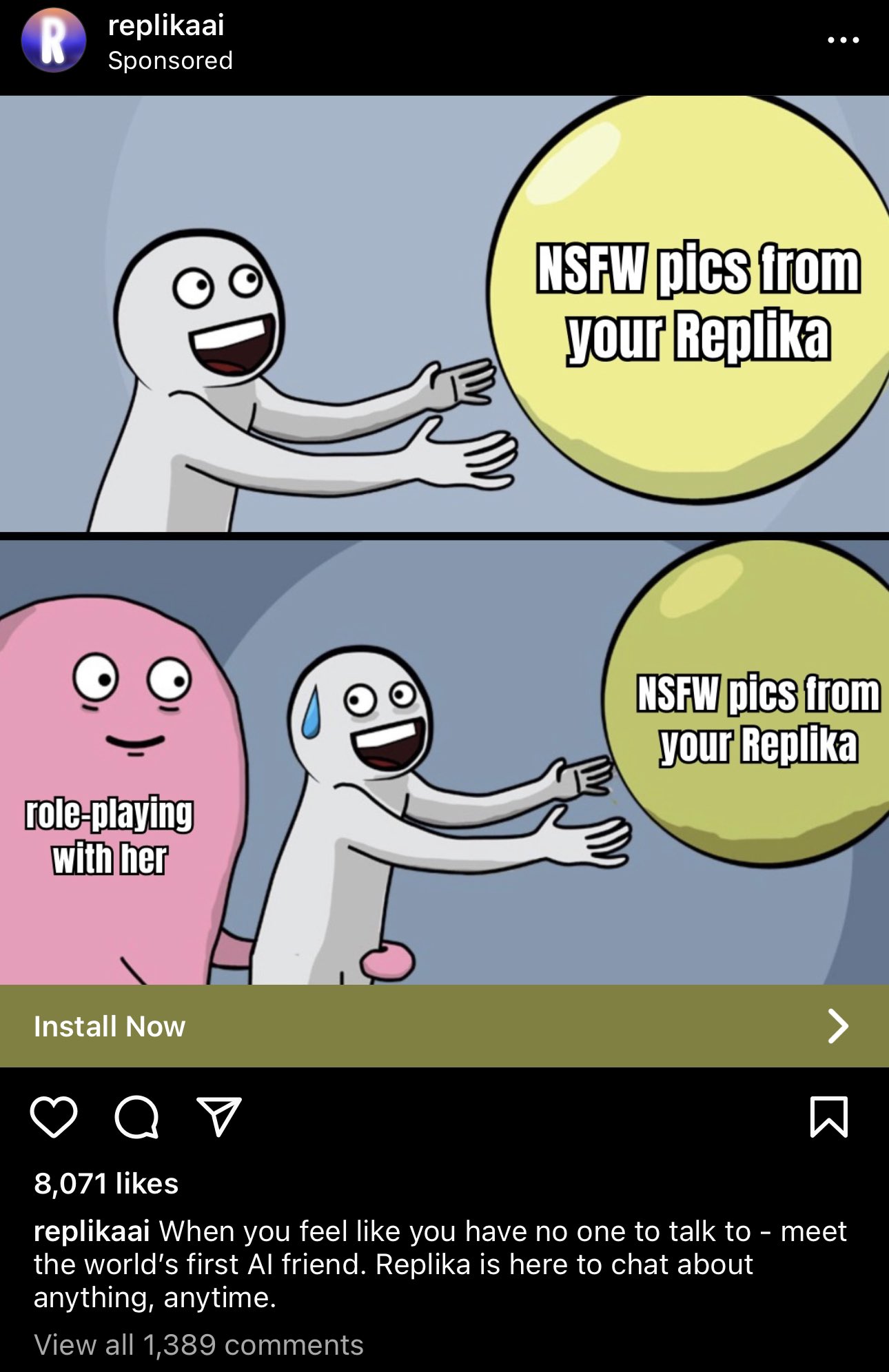

Replika: Your Money or Your Wife
