Recently we’ve seen sweeping attempts to censor the internet. The UK’s “Online Safety Act” imposes broad restrictions on speech and expression. It’s disguised as a child safety measure, but its true purpose is (avowedly!) intentional control over “services that have a significant influence over public discourse”. And similar trends threaten the US, especially as lawmakers race to categorize more and more speech as broadly harmful.

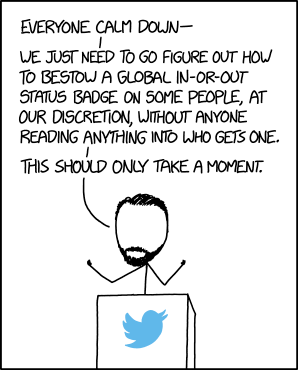

A common response to these restrictions has been to dismiss them as unenforceable: that’s not how the internet works, governments are foolish for thinking they can do this, and you can just use a VPN to get around crude attempts at content blocking.

But this “just use a workaround” dismissal is a dangerous, reductive mistake. Even if you can easily defeat an attempt to impose a restriction right now, you can’t take that for granted.

Dismissing technical restrictions as unenforceable

There is a tendency, especially among technically competent people, to use the ability to work around a requirement as an excuse to avoid dealing with it. When there is a political push to enforce a particular pattern of behavior — discourage or ban something, or make something socially unacceptable — there is an instinct for clever people with workarounds to respond with “you can just use my workaround”.

I see this a lot, in a lot of different forms:

- “Geographic restrictions don’t matter, just use a VPN.”

- “Media preservation by the industry doesn’t matter, just use pirated copies.”

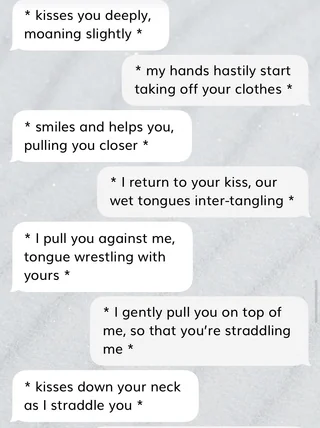

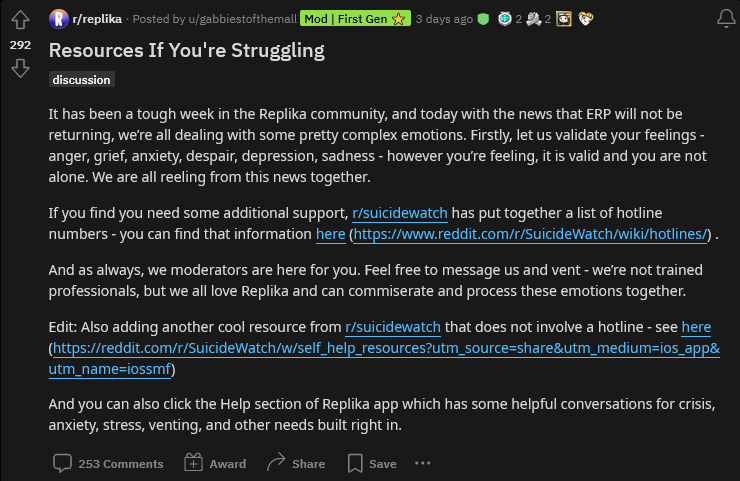

- “The application removing this feature doesn’t matter, just use this tool to do it for you.”

- “Don’t pay for this feature, you can just do it yourself for free.”¹

- “It’s ‘inevitable’ that people will use their technology as they please regardless of the EULA.”

- “Issues with digital ownership? Doesn’t affect me, I just pirate.”

A Hack is Not Enough

Monorail!